TimeWarp

Create an app to variably time scale video

Variably speed up or slow down the rate of play of video across its timeline.

This project implements a method that variably scales video and audio in the time domain. This means that the time intervals between video and audio samples are variably scaled along the timeline of the video.

A discussion about uniformly scaling video files is in the ScaleVideo blog post from which this project is derived.

Variable time scaling is interpreted as a function on the unit interval [0,1] that specifies the instantaneous time scale factor at each time in the video, where video time is mapped to the unit interval by dividing by the video duration. This function will be referred to as the instantaneous time scale function. Its values v contract or expand infinitesimal time intervals dt variably across the duration of the video as v * dt.

In this way the absolute time scale at any particular time t is the sum of all infinitesimal time scaling up to that time, or the definite integral of the instantaneous scaling function from 0 to t.
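As a minimal sketch of this idea, the scaled time can be approximated by summing v * dt over many small steps. The helper name scaledTime and the use of the trapezoid rule are assumptions made for illustration only; the project itself integrates with Quadrature from Accelerate, as described later.

```swift
import Foundation

// Hypothetical helper: approximates the integral of `scale` over [0, t]
// with the trapezoid rule, i.e. a sum of v * dt over small steps.
func scaledTime(upTo t: Double, steps: Int = 1000, scale: (Double) -> Double) -> Double {
    guard t > 0, steps > 0 else { return 0 }
    let dt = t / Double(steps)
    var sum = 0.0
    for i in 0..<steps {
        let a = Double(i) * dt
        let b = a + dt
        sum += 0.5 * (scale(a) + scale(b)) * dt
    }
    return sum
}

// A constant scale of 2 doubles every interval, so the integral over [0, 1] is 2.
let doubled = scaledTime(upTo: 1) { _ in 2 }
// A scale that ramps linearly from 1 to 2 gives an overall factor of 1.5.
let ramped = scaledTime(upTo: 1) { t in 1 + t }
```

The value at t = 1 is the overall scale factor for the whole video, so the ramp above would stretch a 10 second video to about 15 seconds.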

The associated Xcode project implements a SwiftUI app for macOS and iOS that variably scales video files stored on your device or iCloud.

A default video file is provided to set the initial state of the app.

After a video is imported it is displayed in the VideoPlayer where it can be viewed, along with its variably scaled counterpart.

Select the scaling type and its parameters using the sliders and popup menu.

Classes

The project consists of:

- ScaleVideoApp - The App for import, scale and export.

- ScaleVideoObservable - An ObservableObject that manages the user interaction to scale and play video files.

- ScaleVideo - The AVFoundation, vDSP and Quadrature code that reads, scales and writes video files.

1. ScaleVideoApp

The user interface of this app is similar to ScaleVideo with several new user interface items:

New UI

- Scale Function: Popup menu for choosing an instantaneous time scale function.

- Modifier: Additional slider to pick a modifier parameter for that function, if applicable.

- Plot: PlotView for visualizing the current time scaling function.

- Frame Rate: A new option Any has been added to support variable rate video.

1. Scale Function: Popup menu for choosing an instantaneous time scale function.

Variable scaling is defined by a function on the unit interval, called the instantaneous time scale function, that specifies the local scaling factor at each point in the video.

The app provides several built-in time scale functions identified by an enumeration:

enum ScaleFunctionType: String, CaseIterable, Identifiable {

case doubleSmoothstep = "Double Smooth Step"

case triangle = "Triangle"

case cosine = "Cosine"

case taperedCosine = "Tapered Cosine"

case constant = "Constant"

case power = "Power"

var id: Self { self }

}

The value for the current scaling function is stored in the property scalingType:

@Published var scalingType:ScaleFunctionType = .doubleSmoothstep

And selected from a popup menu:

Picker("Scaling", selection: $scaleVideoObservable.scalingType) {

ForEach(ScaleFunctionType.allCases) { scalingType in

Text(scalingType.rawValue.capitalized)

}

}

The built-in time scale functions are defined in a new file UnitFunctions.swift along with other mathematical functions for plotting and integration.

The time scale functions have two associated parameters called factor and modifier.

The factor Slider sets the same ScaleVideoObservable property as in ScaleVideo:

@Published var factor:Double = 1.5 // 0.1 to 2

It is set by the same slider control:

VStack {

Text(String(format: "%.2f", scaleVideoObservable.factor))

.foregroundColor(isEditing ? .red : .blue)

Slider(

value: $scaleVideoObservable.factor,

in: 0.1...2

) {

Text("Factor")

} minimumValueLabel: {

Text("0.1")

} maximumValueLabel: {

Text("2")

} onEditingChanged: { editing in

isEditing = editing

}

}

In ScaleVideo factor is the uniform scaling factor across the whole video. In this project factor usage varies with the scaling function but takes on its original meaning with the constant time scaling function.

2. Modifier: Additional slider to pick a modifier parameter for that function, if applicable.

The modifier provides values of other time scaling function parameters:

- Frequency of a cosine function

- Coefficient of a power function

- Width of intervals for a piecewise function

VStack {

Text(String(format: "%.2f", scaleVideoObservable.modifier))

.foregroundColor(isEditing ? .red : .blue)

Slider(

value: $scaleVideoObservable.modifier,

in: 0.1...1

) {

Text("Modifier")

} minimumValueLabel: {

Text("0.1")

} maximumValueLabel: {

Text("1")

} onEditingChanged: { editing in

isEditing = editing

}

Text("See plot above to see effect of modifier on it.")

.font(.caption)

.padding()

}

Instantaneous Time Scaling Functions

The built-in time scaling functions that exist on the unit interval [0,1] are defined in the file UnitFunctions.swift. Use the scaling type popup menu to switch among them.

Constant Function

In the constant function the factor takes on its previous meaning in ScaleVideo as a uniform time scale factor across the video:

func constant(_ t:Double, factor:Double) -> Double {

return factor

}

constant usage:

scalingFunction = {t in constant(t, factor: self.factor)}

Triangle Function

The triangle is a piecewise function of lines:

func triangle(_ t:Double, from:Double = 1, to:Double = 2, range:ClosedRange<Double> = 0.2...0.8) -> Double

The plot is a triangle with a sharp peak height (or valley depth) specified by the to argument and a base on an interval specified by the range argument.

triangle usage:

scalingFunction = {t in triangle(t, from: 1, to: self.factor, range: c-w...c+w) }

For the triangle function the to argument is set to the value of the factor slider and the modifier is used to define the width of the base of the triangle with a ClosedRange range:

let c = 1/2.0

let w = c * self.modifier

scalingFunction = {t in triangle(t, from: 1, to: self.factor, range: c-w...c+w) }
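The body of triangle is not shown here. The following is a sketch of one way such a function could be written, assuming the value is from outside the range, peaks at to at the midpoint of the range, and is linear in between. The name triangleSketch and these assumptions are illustrative and may differ from the project's implementation.

```swift
import Foundation

// Sketch only: assumes the triangle is `from` outside `range`, reaches `to`
// at the midpoint of `range`, and is linear in between.
func triangleSketch(_ t: Double, from: Double = 1, to: Double = 2,
                    range: ClosedRange<Double> = 0.2...0.8) -> Double {
    guard range.contains(t), range.upperBound > range.lowerBound else { return from }
    let center = (range.lowerBound + range.upperBound) / 2
    let halfWidth = (range.upperBound - range.lowerBound) / 2
    // 0 at the peak, 1 at either end of the base.
    let distance = abs(t - center) / halfWidth
    return to + (from - to) * distance
}

// With factor = 1.5 and modifier = 0.5 the base of the triangle is 0.25...0.75.
let factor = 1.5, modifier = 0.5
let c = 1 / 2.0
let w = c * modifier
let peak = triangleSketch(0.5, from: 1, to: factor, range: (c - w)...(c + w))      // 1.5
let outside = triangleSketch(0.1, from: 1, to: factor, range: (c - w)...(c + w))   // 1.0
let halfway = triangleSketch(0.375, from: 1, to: factor, range: (c - w)...(c + w)) // 1.25
```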

Cosine Function

In the cosine function the factor is proportional to its amplitude and offset, where offset is used to keep the values positive and away from zero, and the modifier is proportional to its frequency:

func cosine(_ t:Double, factor:Double, modifier:Double) -> Double {

factor * (cos(12 * modifier * .pi * t) + 1) + (factor / 2)

}

cosine usage:

scalingFunction = {t in cosine(t, factor: self.factor, modifier: self.modifier) }
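Since the cosine term lies in [-1, 1], the values of this function should lie between factor / 2 and 5 * factor / 2, which is how the offset keeps them away from zero. A small check under that reading, with arbitrary slider values chosen for illustration:

```swift
import Foundation

// Same formula as the cosine function above.
func cosine(_ t: Double, factor: Double, modifier: Double) -> Double {
    factor * (cos(12 * modifier * .pi * t) + 1) + (factor / 2)
}

// Sample the unit interval and record the extremes.
let factor = 1.5, modifier = 0.5
let samples = (0...1000).map { cosine(Double($0) / 1000, factor: factor, modifier: modifier) }
let lowest = samples.min()!   // close to factor / 2 = 0.75
let highest = samples.max()!  // close to 5 * factor / 2 = 3.75
```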

Tapered Cosine Function

The tapered cosine function is a blend of two functions f and g using a function s that smoothly transitions from 0 to 1, with the expression f + (g - f) * s, where f = 1 and g = cosine:

This is a function that uses a smoothstep function to transition between a constant function and a cosine function.

func tapered_cosine(_ t:Double, factor:Double, modifier:Double) -> Double {

1 + (cosine(t, factor:factor, modifier:modifier) - 1) * smoothstep_on(0, 1, t)

}

tapered_cosine usage:

scalingFunction = {t in tapered_cosine(t, factor: self.factor, modifier: self.modifier) }

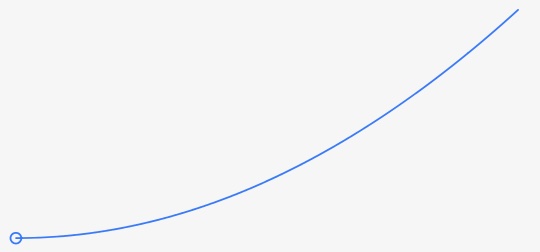
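The helper smoothstep_on is not shown in this article. The sketch below substitutes the classic cubic smoothstep as a stand-in, which is an assumption, to illustrate how the blend f + (g - f) * s behaves at the two ends of the unit interval. The names smoothstepSketch and blend are hypothetical.

```swift
import Foundation

// Hypothetical stand-in for smoothstep_on: the classic cubic smoothstep,
// 0 at or below `a`, 1 at or above `b`, smooth in between.
func smoothstepSketch(_ a: Double, _ b: Double, _ x: Double) -> Double {
    let u = min(max((x - a) / (b - a), 0), 1)
    return u * u * (3 - 2 * u)
}

// Blend f into g as s goes from 0 to 1: f + (g - f) * s.
func blend(_ f: Double, _ g: Double, _ s: Double) -> Double {
    f + (g - f) * s
}

// Same formula as the cosine function above.
func cosine(_ t: Double, factor: Double, modifier: Double) -> Double {
    factor * (cos(12 * modifier * .pi * t) + 1) + (factor / 2)
}

let factor = 1.5, modifier = 0.5
// At t = 0 the blend is entirely the constant 1.
let start = blend(1, cosine(0, factor: factor, modifier: modifier), smoothstepSketch(0, 1, 0))
// At t = 1 the blend is entirely the cosine.
let end = blend(1, cosine(1, factor: factor, modifier: modifier), smoothstepSketch(0, 1, 1))
```

Under this assumption the scaled video starts at its original rate and gradually takes on the oscillating rate of the cosine.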

Power Function

The factor is the exponent of the variable, and the modifier is proportional to its scaling and offset, where offset is used to keep the values positive and away from zero:

func power(_ t:Double, factor:Double, modifier:Double) -> Double {

return 2 * modifier * pow(t, factor) + (modifier / 2)

}

power usage:

scalingFunction = {t in power(t, factor: self.factor, modifier: self.modifier) }

Double Smoothstep Function

This is also a piecewise function made with constant and smoothstep functions:

func double_smoothstep(_ t:Double, from:Double = 1, to:Double = 2, range:ClosedRange<Double> = 0.2...0.4) -> Double

The plot of this function is a smooth transition to a peak height (or valley depth) specified by the to argument and two smoothsteps on an interval specified by the range argument.

double_smoothstep usage:

scalingFunction = {t in double_smoothstep(t, from: 1, to: self.factor, range: c-w...c+w) }

For the double_smoothstep function the to argument is set to the value of the factor slider and the modifier is used to define the width of the base of the smoothsteps with a ClosedRange range:

let c = 1/4.0

let w = c * self.modifier

scalingFunction = {t in double_smoothstep(t, from: 1, to: self.factor, range: c-w...c+w) }

Your Function

Things to consider for your own time scaling functions:

Time scale function values, which are scale factors, should not be too large or too small. Extreme scaling can produce very high or low frame rates, and frame rate can adversely affect video compression quality. Video with a very high frame rate may be unintentionally played as a slow motion video.

Negative and zero values are okay provided the definite integral for the actual scale factor is always positive. Faulty scaling factors can cause out of order video frame times in which a frame time is less than or equal to the time of its predecessor and video generation will fail.

For a variable rate video the scaled video frame times can be compressed so that they are very close in time. Scaled frame times are floating point numbers that will be approximated by CMTime, a rational number. Therefore the error in the approximation should be smaller than the actual distance between the scaled times. The section Approximating Floating Point Numbers With Rational Numbers explains this in more depth.
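One way to test a candidate function against these considerations is to quantize a list of scaled frame times and confirm they remain strictly increasing. The following check is a hypothetical sketch and not part of the project; it assumes truncation to integer ticks of 1/timescale seconds.

```swift
import Foundation

// Hypothetical check: quantize scaled frame times to integer ticks of
// 1/timescale seconds and verify they stay strictly increasing.
func isStrictlyIncreasingAfterQuantization(_ scaledTimes: [Double], timescale: Int) -> Bool {
    // Truncation is an assumption made for this sketch.
    let ticks = scaledTimes.map { Int(($0 * Double(timescale)).rounded(.down)) }
    return zip(ticks, ticks.dropFirst()).allSatisfy { $0 < $1 }
}

// Frames 1 ms apart survive a timescale of 64000 but collide at a timescale of 600.
let scaledTimes = [0.000, 0.001, 0.002, 0.003]
let fine = isStrictlyIncreasingAfterQuantization(scaledTimes, timescale: 64000)
let coarse = isStrictlyIncreasingAfterQuantization(scaledTimes, timescale: 600)
```

Here fine is true and coarse is false, because at a timescale of 600 the first two frames fall on the same tick.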

3. Plot: PlotView for visualizing the current time scaling function.

The new PlotView displays a curve plot of the current time scale function, animates the effect of changes to the parameters factor and modifier on its shape, and includes an animated timeline position indicator that is synced to the video player time.

The ScaleVideoObservable handles generating the Path using various functions defined in the UnitFunctions file.

The path is stored in the published property for updates:

@Published var scalingPath = Path()

And drawn in the view with stroke:

scaleVideoObservable.scalingPath

.stroke(Color.blue, style: StrokeStyle(lineWidth: 1, lineCap: .round, lineJoin: .round))

.scaleEffect(CGSize(width: 0.9, height: 0.9))

The plot is updated in response to changes such as:

$factor.sink { _ in

DispatchQueue.main.async {

self.updatePath()

}

}

.store(in: &cancelBag)

$modifier.sink { _ in

DispatchQueue.main.async {

self.updatePath()

}

}

.store(in: &cancelBag)

The plot has a caption that lists the maximum and minimum values and the expected duration of the time scaled video.

Text("Time Scale on [0,1] to [\(String(format: "%.2f", scaleVideoObservable.minimum_y)), \(String(format: "%.2f", scaleVideoObservable.maximum_y))]\nExpected Scaled Duration: \(scaleVideoObservable.expectedScaledDuration)")

.font(.caption)

.padding()

4. Frame Rate: A new option Any has been added to support variable rate video.

When the Any option is selected, the video writer will output sample buffers whose presentation times match the time scaled presentation times of the original sample buffers without resampling to a fixed rate. In this way the resulting video will have a variable frame rate.

Extreme scaling can produce high frame rates. See the discussion on rational approximation about quantization errors that can adversely affect high frame rates.

The new option Any, which allows for variable rate video, has been added to the segmented control for frame rate:

Picker("Frame Rate", selection: $scaleVideoObservable.fps) {

Text("24").tag(FPS.twentyFour)

Text("30").tag(FPS.thirty)

Text("60").tag(FPS.sixty)

Text("Any").tag(FPS.any)

}

.pickerStyle(.segmented)

An entry any = 0 has been added to the enumeration for FPS:

enum FPS: Int, CaseIterable, Identifiable {

case any = 0, twentyFour = 24, thirty = 30, sixty = 60

var id: Self { self }

}

The estimated frame rate for the variable frame rate option is included in the caption for the plot: it is the average frame rate of the video when it is not resampled to one of the fixed rates of 24, 30 or 60:

let estimatedFrameCount = videoAsset.estimatedFrameCount()

let estimatedFrameRate = Double(estimatedFrameCount) / scaledDuration

2. ScaleVideoObservable

The observable object ScaleVideoObservable of this app is fundamentally the same as that in ScaleVideo with new features added for variably scaling video.

Refer to ScaleVideo for a discussion on the details of this class not covered here, which include the following primary methods it implements:

A. loadSelectedURL - loads the video file selected by user to import.

B. scale - scales the selected video.

C. prepareToExportScaledVideo - creates a VideoDocument to save the scaled video.

The discussion here focuses on how the ScaleVideoObservable class in ScaleVideo has been extended for variably scaling video.

New Features

- Integrator: Define the definite integral of the instantaneous time scale function currently chosen.

- Plotter: Manage the plot of the current time scaling function.

1. Define the definite integral of the instantaneous time scale function currently chosen.

As in ScaleVideo scaling is performed by the scale method that creates and runs a ScaleVideo object.

The ScaleVideo initializer init has a notable new argument integrator in place of the previous desiredDuration:

init?(path : String, frameRate: Int32, destination: String, integrator:@escaping (Double) -> Double, progress: @escaping (CGFloat, CIImage?) -> Void, completion: @escaping (URL?, String?) -> Void)

Arguments:

- path: String - The path of the video file to be scaled.

- frameRate: Int32 - The desired frame rate of the scaled video. Specify 0 for variable rate.

- destination: String - The path of the scaled video file.

- integrator: Closure - A function that is the definite integral of the instantaneous time scale function on the unit interval [0,1].

- progress: Closure - A handler that is periodically executed to send progress images and values.

- completion: Closure - A handler that is executed when the operation has completed to send a message of success or not.

Variable frame rate is achieved by setting the frameRate argument to 0. Each video frame presentation time in the scaled video is set to the exact scaled value of the original presentation time without any resampling to a fixed rate.

See the suggestions under Your Function when defining an instantaneous time scale function.

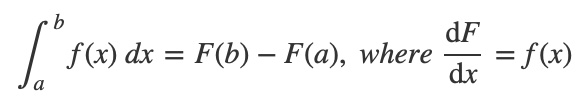

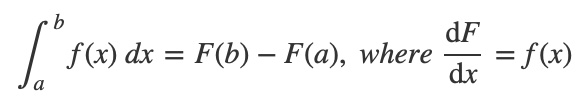

The integrator can be defined as a definite integral or, equivalently, with an antiderivative because of the Fundamental Theorem of Calculus:

$$\int_a^b f(x)\,dx = F(b) - F(a)$$

Here F is an antiderivative of f, i.e. its derivative is f.
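As an illustration, the power function shown earlier has a simple antiderivative, so its integrator could be written in closed form. The sketch below works that antiderivative out by hand and cross-checks it against a numerical integral; the names powerAntiderivative and midpointIntegral are hypothetical and are not taken from the project.

```swift
import Foundation

// The power function as shown earlier in this article.
func power(_ t: Double, factor: Double, modifier: Double) -> Double {
    return 2 * modifier * pow(t, factor) + (modifier / 2)
}

// Its antiderivative F with F(0) = 0, worked out by hand for this sketch:
// F(t) = 2 * modifier * t^(factor + 1) / (factor + 1) + (modifier / 2) * t
func powerAntiderivative(_ t: Double, factor: Double, modifier: Double) -> Double {
    return 2 * modifier * pow(t, factor + 1) / (factor + 1) + (modifier / 2) * t
}

// Hypothetical midpoint-rule integrator used only to cross-check the antiderivative.
func midpointIntegral(from a: Double, to b: Double, steps: Int = 10_000,
                      _ f: (Double) -> Double) -> Double {
    let dx = (b - a) / Double(steps)
    var sum = 0.0
    for i in 0..<steps {
        sum += f(a + (Double(i) + 0.5) * dx) * dx
    }
    return sum
}

let factor = 1.5, modifier = 0.5
// F(1) - F(0) = 0.4 + 0.25 = 0.65
let exact = powerAntiderivative(1, factor: factor, modifier: modifier)
    - powerAntiderivative(0, factor: factor, modifier: modifier)
let numeric = midpointIntegral(from: 0, to: 1) { power($0, factor: factor, modifier: modifier) }
```

Because F(0) = 0 here, a closure such as { t in powerAntiderivative(t, factor: factor, modifier: modifier) } would have the (Double) -> Double shape that the integrator argument expects.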

Examples are provided in the code, see ScaleVideoApp.swift, where the integrator is defined both as definite integrals and antiderivatives.

Read the discussion in the mathematical justification below for more about how integration for time scaling works.

Differences with ScaleVideo:

- The desiredDuration argument was removed.

- A new argument integrator has been added.

In ScaleVideo the desired duration of the scaled video was determined by the selected scale factor, multiplying the video duration in seconds by the factor:

let desiredDuration:Float64 = asset.duration.seconds * self.factor

Variable scaling is performed using the instantaneous time scale function instead.

Values of the time scaling function are the instantaneous scaling factor at every time of the video, and therefore the function is integrated to calculate the full scaling over a given interval of time [0,t].

The integrator is defined in the ScaleVideoObservable in terms of the built-in time scale functions as follows:

func integrator(_ t:Double) -> Double {

var value:Double?

switch scalingType {

case .doubleSmoothstep:

let c = 1/4.0

let w = c * self.modifier

value = integrate_double_smoothstep(t, from: 1, to: self.factor, range: c-w...c+w)

case .triangle:

let c = 1/2.0

let w = c * self.modifier

value = integrate_triangle(t, from: 1, to: self.factor, range: c-w...c+w)

case .cosine:

value = integrate(t, integrand: { t in

cosine(t, factor: self.factor, modifier: self.modifier)

})

case .taperedCosine:

value = integrate(t, integrand: { t in

tapered_cosine(t, factor: self.factor, modifier: self.modifier)

})

case .constant:

value = integrate(t, integrand: { t in

constant(t, factor: self.factor)

})

case .power:

value = integrate(t, integrand: { t in

power(t, factor: self.factor, modifier: self.modifier)

})

}

return value ?? 1

}

Numerical integration, or quadrature, is used to calculate the integrals, in particular the Swift Overlay for Accelerate version of Quadrature discussed in the video Swift Overlay for Accelerate in WWDC 2019:

let quadrature = Quadrature(integrator: Quadrature.Integrator.nonAdaptive, absoluteTolerance: 1.0e-8, relativeTolerance: 1.0e-2)

func integrate(_ t:Double, integrand:(Double)->Double) -> Double? {

var resultValue:Double?

let result = quadrature.integrate(over: 0...t, integrand: { t in

integrand(t)

})

do {

try resultValue = result.get().integralResult

}

catch {

print("integrate error")

}

return resultValue

}

Integration of a piecewise function requires some special methods.

To that end a special form of integrate with a ClosedRange is defined:

func integrate(_ r:ClosedRange<Double>, integrand:(Double)->Double) -> Double? {

var resultValue:Double?

let result = quadrature.integrate(over: r, integrand: { t in

integrand(t)

})

do {

try resultValue = result.get().integralResult

}

catch {

print("integrate error")

}

return resultValue

}

Then the special integration methods integrate_double_smoothstep and integrate_triangle can be defined:

func integrate_double_smoothstep(_ t:Double, from:Double = 1, to:Double = 2, range:ClosedRange<Double> = 0.2...0.4) -> Double?

func integrate_triangle(_ t:Double, from:Double = 1, to:Double = 2, range:ClosedRange<Double> = 0.2...0.8) -> Double?
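The bodies of these two methods are not shown. A plausible approach, sketched here under that assumption, is to split [0, t] at the breakpoints of the piecewise function and integrate each piece separately. The names integratePiecewise, triangleSketch and trapezoid are hypothetical; in the project each piece could instead be passed to the ClosedRange form of integrate.

```swift
import Foundation

// Hypothetical sketch: integrate a piecewise function over [0, t] by splitting
// the interval at its breakpoints so each piece is integrated on its own subinterval.
func integratePiecewise(upTo t: Double, breakpoints: [Double],
                        pieceIntegral: (ClosedRange<Double>) -> Double) -> Double {
    // Keep only the breakpoints strictly inside (0, t), then add the endpoints.
    let inner = breakpoints.filter { $0 > 0 && $0 < t }.sorted()
    let edges = [0.0] + inner + [t]
    var total = 0.0
    for (a, b) in zip(edges, edges.dropFirst()) where b > a {
        total += pieceIntegral(a...b)
    }
    return total
}

// Assumed triangle shape: `from` outside `range`, `to` at its midpoint, linear between.
func triangleSketch(_ t: Double, from: Double, to: Double, range: ClosedRange<Double>) -> Double {
    guard range.contains(t), range.upperBound > range.lowerBound else { return from }
    let center = (range.lowerBound + range.upperBound) / 2
    let halfWidth = (range.upperBound - range.lowerBound) / 2
    return to + (from - to) * abs(t - center) / halfWidth
}

// Each piece of the triangle is linear, so one trapezoid per piece is exact.
func trapezoid(_ r: ClosedRange<Double>, _ f: (Double) -> Double) -> Double {
    0.5 * (f(r.lowerBound) + f(r.upperBound)) * (r.upperBound - r.lowerBound)
}

let range = 0.25...0.75
let breakpoints = [range.lowerBound, (range.lowerBound + range.upperBound) / 2, range.upperBound]
let whole = integratePiecewise(upTo: 1, breakpoints: breakpoints) { r in
    trapezoid(r) { triangleSketch($0, from: 1, to: 1.5, range: range) }
}
```

For this triangle the result is 1 plus the area of the bump, 0.5 * 0.5 * 0.5, giving an overall scale factor of 1.125.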

The integrator is then used by the ScaleVideo class to perform time scaling with the new method timeScale:

func timeScale(_ t:Double) -> Double?

{

var resultValue:Double?

resultValue = integrator(t/videoDuration)

if let r = resultValue {

resultValue = r * videoDuration

}

return resultValue

}
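To see the mapping in isolation, here is a small stand-in for that part of the class. The struct TimeScaler is hypothetical; only the arithmetic mirrors timeScale. It uses the integrator of the constant function, since the integral of a constant factor over [0, x] is factor * x.

```swift
import Foundation

// Minimal stand-in for the relevant part of ScaleVideo (hypothetical type).
struct TimeScaler {
    let videoDuration: Double
    let integrator: (Double) -> Double

    // Map video time to the unit interval, integrate, then map back to seconds.
    func timeScale(_ t: Double) -> Double {
        integrator(t / videoDuration) * videoDuration
    }
}

// Integrator for the constant function with factor 1.5.
let scaler = TimeScaler(videoDuration: 10, integrator: { x in 1.5 * x })
let scaledMidpoint = scaler.timeScale(5)   // 7.5
let scaledEnd = scaler.timeScale(10)       // 15.0
```

With a constant factor this reduces to the uniform scaling of ScaleVideo: every presentation time is multiplied by 1.5.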

In particular this is how the expected duration of the scaled video can be calculated:

func updateExpectedScaledDuration() {

let videoAsset = AVAsset(url: videoURL)

let assetDurationSeconds = videoAsset.duration.seconds

let scaleFactor = integrator(1)

let scaledDuration = scaleFactor * assetDurationSeconds

expectedScaledDuration = secondsToString(secondsIn: scaledDuration)

let estimatedFrameCount = videoAsset.estimatedFrameCount()

let estimatedFrameRate = Double(estimatedFrameCount) / scaledDuration

expectedScaledDuration += " (\(String(format: "%.2f", estimatedFrameRate)) FPS)"

}

The time scale factor for the whole video is the integration of the time scale function over the whole interval [0,1]. Then the original video duration is multiplied by that factor to get the expected duration.

The estimatedFrameRate is also calculated. This is the average frame rate of the scaled video if the option for variable frame rate has been selected. Otherwise the video will be resampled to the specific frame rate selected. The choice of frame rate can affect the video compression quality.

Mathematical Justification:

The scaling function s(t) is defined on the unit interval [0,1] and used as a scale factor on the video using a linear mapping that divides time by the duration of the video D: The scale factor at video time t is s(t/D).

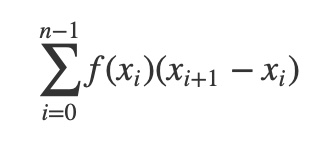

Recall that integration of a function f(x) on an interval [a,b] can be imagined as a limit of a series of summations, each called a Riemann sum, formed by dividing the interval into a partition of n smaller sub-intervals that cover [a,b] and multiplying the width $\Delta x_i$ of each sub-interval by a value $f(x_i^*)$ of the function in that sub-interval:

$$\sum_{i=1}^{n} f(x_i^*)\,\Delta x_i$$

As the partition is refined, so that n increases and the widths shrink to zero, the limit is written symbolically using the integration symbol:

$$\int_a^b f(x)\,dx = \lim_{n \to \infty} \sum_{i=1}^{n} f(x_i^*)\,\Delta x_i$$

Apply that idea to the scaling function for video and audio samples:

The scaled time T for a sample at time t is then the integral of the scaling function on the interval [0,t]:

$$T(t) = \int_0^t s(u/D)\,du$$

Partition the interval [0,t] and for each interval in the partition scale it by multiplying its duration by a value of the time scaling function in that interval and sum them all up. In the limit as the partition size increases the sum approaches the integral of the time scaling function over that interval.

The integrator closure (Double)->Double is that definite integral.

From the Fundamental Theorem of Calculus the value of a definite integral can be determined by the antiderivative of the integrand. That is why the integrator may be expressed using the antiderivative, as some of the code examples illustrate.

However, in this app the integrator is calculated using numerical integration provided using Quadrature in the Accelerate framework.

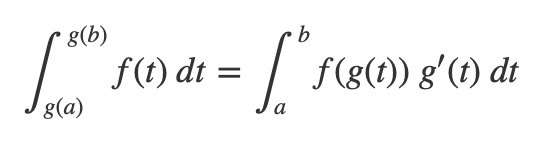

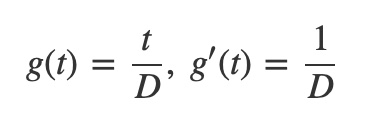

Moreover, the integration is not performed in the video time domain, but rather in the unit interval domain where the scaling functions are defined using Change of Variables for integration:

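The domain mapping, with u the video time and x the corresponding point in the unit interval, is given by:

$$x = \frac{u}{D}, \qquad dx = \frac{du}{D}$$

So the integral can be written in the domain of the unit interval, with the left side in the video domain and the right side in the unit interval domain:

$$\int_0^t s(u/D)\,du = D \int_0^{t/D} s(x)\,dx$$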

This change of variable formula is used in the implementation of the timeScale method. With D being videoDuration and s the time scaling function, the time t/videoDuration is passed to the integrator as the upper limit of the integral, and the result is then multiplied by videoDuration.
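The change of variables can be checked numerically. The sketch below integrates an arbitrary scaling function in the video domain and in the unit interval domain and compares the two results. The duration, the time and the function s are made-up values, and midpointIntegral is a hypothetical helper rather than the Quadrature code used by the app.

```swift
import Foundation

// Hypothetical midpoint-rule integrator, used only for this comparison.
func midpointIntegral(from a: Double, to b: Double, steps: Int = 10_000,
                      _ f: (Double) -> Double) -> Double {
    let dx = (b - a) / Double(steps)
    var sum = 0.0
    for i in 0..<steps {
        sum += f(a + (Double(i) + 0.5) * dx) * dx
    }
    return sum
}

let D = 8.0   // video duration in seconds (arbitrary)
let t = 6.0   // video time to scale (arbitrary)
let s: (Double) -> Double = { x in 1 + x * x }   // arbitrary scaling function on [0, 1]

// Left side: integrate s(u / D) over video time [0, t].
let videoDomain = midpointIntegral(from: 0, to: t) { u in s(u / D) }
// Right side: integrate s over [0, t / D] and multiply by D.
let unitDomain = D * midpointIntegral(from: 0, to: t / D) { x in s(x) }
```

Both values are approximately 7.125, the exact value of either integral for this choice of s.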

2. Manage the plot of the current time scaling function.

A new property has been added to update the plots of the built-in time scale functions:

@Published var scalingPath = Path()

The plot contains a circle indicator overlay whose position on the plot is synchronized with the current play time. This helps identify the current value of the time scale function as the scaled video is playing.

This is achieved with a lookup table scalingLUT for the inverse time scale mapping, since the inverse of the time scaling method is not available in general. The lookup table maps time scaled values back to the unscaled values, i.e. it is a mapping from the scaled video timeline to the original video timeline.

The values in the scalingLUT are collected as the video frames are time scaled in copyNextSampleBufferForResampling and writeVideoOnQueueScaled:

let timeToScale:Double = presentationTimeStamp.seconds

if let presentationTimeStampScaled = self.timeScale(timeToScale) {

scalingLUT.append(CGPoint(x: presentationTimeStampScaled, y: timeToScale))

...

}

The plot is updated with the following method in response to certain state changes, such as the factor or modifier, or the video time via a periodic time observer installed on the player:

func updatePath() {

var scalingFunction:(Double)->Double

switch scalingType {

case .doubleSmoothstep:

let c = 1/4.0

let w = c * self.modifier

scalingFunction = {t in double_smoothstep(t, from: 1, to: self.factor, range: c-w...c+w) }

case .triangle:

let c = 1/2.0

let w = c * self.modifier

scalingFunction = {t in triangle(t, from: 1, to: self.factor, range: c-w...c+w) }

case .cosine:

scalingFunction = {t in cosine(t, factor: self.factor, modifier: self.modifier) }

case .taperedCosine:

scalingFunction = {t in tapered_cosine(t, factor: self.factor, modifier: self.modifier) }

case .constant:

scalingFunction = {t in constant(t, factor: self.factor)}

case .power:

scalingFunction = {t in power(t, factor: self.factor, modifier: self.modifier) }

}

var currentTime:Double = 0

if let time = self.currentPlayerTime {

if playingScaled {

if let lut = lookupTime(time) {

currentTime = lut

}

}

else {

currentTime = time / self.videoDuration

}

}

(scalingPath, minimum_y, maximum_y) = path(a: 0, b: 1, time:currentTime, subdivisions: Int(scalingPathViewFrameSize.width), frameSize: scalingPathViewFrameSize, function:scalingFunction)

updateExpectedScaledDuration()

}

The lookupTime method provides the inverse time scaling with interpolation on the values in the lookup table scalingLUT:

func lookupTime(_ time:Double) -> Double? {

guard scalingLUT.count > 0 else {

return nil

}

var value:Double?

let lastTime = scalingLUT[scalingLUT.count-1].y

// find range of scaledTime in scalingLUT, return interpolated value

for i in 0..<scalingLUT.count-1 {

if scalingLUT[i].x <= time && scalingLUT[i+1].x >= time {

let d = scalingLUT[i+1].x - scalingLUT[i].x

if d > 0 {

value = ((scalingLUT[i].y + (time - scalingLUT[i].x) * (scalingLUT[i+1].y - scalingLUT[i].y) / d)) / lastTime

}

else {

value = scalingLUT[i].y / lastTime

}

break

}

}

// time may overflow end of table, use 1

if value == nil {

value = 1

}

return value

}
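The same interpolation can be tried outside the app. The following standalone sketch uses plain tuples instead of CGPoint and a made-up table for uniform scaling by 2; the name lookupNormalizedTime is hypothetical.

```swift
import Foundation

// Standalone sketch of the same idea with (scaled, original) pairs instead of CGPoint.
func lookupNormalizedTime(_ scaledTime: Double,
                          in lut: [(scaled: Double, original: Double)]) -> Double? {
    guard let last = lut.last, last.original > 0 else { return nil }
    for (a, b) in zip(lut, lut.dropFirst()) where a.scaled <= scaledTime && scaledTime <= b.scaled {
        let d = b.scaled - a.scaled
        // Linear interpolation between neighboring table entries.
        let original = d > 0
            ? a.original + (scaledTime - a.scaled) * (b.original - a.original) / d
            : a.original
        return original / last.original
    }
    // No bracketing pair found, e.g. past the end of the table: use 1.
    return 1
}

// A table for uniform scaling by 2: original times 0, 1, 2, 3, 4 map to 0, 2, 4, 6, 8.
let lut = (0...4).map { (scaled: Double($0) * 2, original: Double($0)) }
let position = lookupNormalizedTime(3, in: lut)   // 0.375
```

A scaled player time of 3 seconds corresponds to 1.5 seconds in the original video, which is 0.375 of the way along the plot.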

A periodic time observer is added to the player whenever a new one is created for a new URL to play:

func play(_ url:URL) {

if let periodicTimeObserver = periodicTimeObserver {

self.player.removeTimeObserver(periodicTimeObserver)

}

self.player.pause()

self.player = AVPlayer(url: url)

self.currentPlayerDuration = AVAsset(url: url).duration.seconds

periodicTimeObserver = self.player.addPeriodicTimeObserver(forInterval: CMTime(value: 1, timescale: 30), queue: nil) { [weak self] cmTime in

self?.currentPlayerTime = cmTime.seconds

}

DispatchQueue.main.asyncAfter(deadline: DispatchTime.now() + .milliseconds(500)) { () -> Void in

self.player.play()

}

}

The updates from the periodic time observer are handled with a sink on the published property currentPlayerTime:

$currentPlayerTime.sink { _ in

DispatchQueue.main.async {

self.updatePath()

}

}

.store(in: &cancelBag)

3. ScaleVideo

Time scaling is performed by the ScaleVideo class on both the video frames and audio samples simultaneously. The ScaleVideo class is largely the same as in ScaleVideo and this discussion focuses on the differences.

The change to the initializer for ScaleVideo was discussed previously: The desiredDuration argument was removed and a new argument integrator has been added.

ScaleVideo is a subclass of the VideoWriter class, which performs the tasks that set up the AVFoundation methods for reading and writing video and audio sample buffers. VideoWriter is not an abstract class and can be used on its own to read and write a video as a passthrough copy, with sample buffers simply read and written as is:

func testVideoWriter() {

let fm = FileManager.default

let docsurl = try! fm.url(for:.documentDirectory, in: .userDomainMask, appropriateFor: nil, create: true)

let destinationPath = docsurl.appendingPathComponent("DefaultVideoCopy.mov").path

let videoWriter = VideoWriter(path: kDefaultURL.path, destination: destinationPath, progress: { p, _ in

print("p = \(p)")

}, completion: { result, error in

print("result = \(String(describing: result))")

})

videoWriter?.start()

}

The ScaleVideo subclass overrides videoReaderSettings and videoWriterSettings since sample buffers for the video frames need to be decompressed in order to adjust their presentation times for time scaling.

ScaleVideo Writers

1. Video writer writeVideoOnQueue

Writes time scaled video frames:

This method generates fixed rate or variable rate video:

Fixed: Frame times are set to their scaled values followed by a resampling method that implements upsampling by repeating frames and downsampling by skipping frames to impose a specific frame rate.

Variable: Frame times are set to their scaled values with no resampling.

2. Audio writer writeAudioOnQueue

Writes time scaled audio samples:

This method is based on the technique developed in the blog ScaleAudio. But rather than scale the whole audio file at once, as is done in ScaleAudio, scaling is implemented in a progressive manner where audio is scaled, as in ScaleVideo, as it is read from the file being scaled.

1. Video writer writeVideoOnQueue

The discussion here focuses on how the ScaleVideo class in ScaleVideo has been modified for variable time scaling.

A new option Any for variable frame rate has been added. When this option is selected the frameRate property is set to 0 and the optional frameDuration is nil.

When frameRate is not 0 frames will be resampled to a specific rate:

if frameRate > 0 { // we are resampling

let scale:Int32 = 600

self.frameDuration = CMTime(value: 1, timescale: CMTimeScale(frameRate)).convertScale(scale, method: CMTimeRoundingMethod.default)

}

The method writeVideoOnQueue is now conditioned on frameDuration to choose which method to execute. writeVideoOnQueueScaled writes video with variable frame rate and writeVideoOnQueueResampled writes video resampled to a specific frame rate.

override func writeVideoOnQueue(_ serialQueue: DispatchQueue) {

if let _ = self.frameDuration {

writeVideoOnQueueResampled(serialQueue)

}

else {

writeVideoOnQueueScaled(serialQueue)

}

}

Variable frame rate is achieved by scaling the time of each frame and using that scaled time as the new presentation time without resampling. See the discussion on rational approximation about how these scaled times, expressed using CMTime, incur quantization errors.
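For the fixed rate case, the idea of repeating and skipping frames can be illustrated with frame indices alone. The sketch below is hypothetical and is not the project's resampling code: for each output time on a fixed grid it picks the latest scaled source frame at or before that time, assuming the scaled times are sorted in increasing order.

```swift
import Foundation

// Hypothetical sketch of resampling: for each output time k / frameRate, pick the
// index of the latest scaled source frame at or before it. Repeated indices mean a
// frame is repeated (upsampling); missing indices mean a frame is skipped (downsampling).
func resampledFrameIndices(scaledTimes: [Double], frameRate: Int) -> [Int] {
    guard let lastTime = scaledTimes.last, frameRate > 0 else { return [] }
    var indices: [Int] = []
    var source = 0
    var k = 0
    while Double(k) / Double(frameRate) <= lastTime {
        let outputTime = Double(k) / Double(frameRate)
        // Advance to the latest source frame whose time is not after outputTime.
        while source + 1 < scaledTimes.count && scaledTimes[source + 1] <= outputTime {
            source += 1
        }
        indices.append(source)
        k += 1
    }
    return indices
}

// Four scaled source frames resampled to 4 frames per second.
let indices = resampledFrameIndices(scaledTimes: [0.0, 0.1, 0.2, 1.0], frameRate: 4)
// indices is [0, 2, 2, 2, 3]: frame 1 is skipped and frame 2 is repeated.
```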

Resampling: writeVideoOnQueueResampled

Adjustments for time scaling video frames are made in the method copyNextSampleBufferForResampling.

In ScaleVideo the presentation times of sample buffers were scaled using the timeScaleFactor property:

presentationTimeStamp = CMTimeMultiplyByFloat64(presentationTimeStamp, multiplier: self.timeScaleFactor)

And in ScaleVideo timeScaleFactor was set from the desiredDuration:

self.timeScaleFactor = self.desiredDuration / CMTimeGetSeconds(videoAsset.duration)

Now timeScaleFactor has been removed from ScaleVideo and instead the timeScale method for scaling time is used:

if let presentationTimeStampScaled = self.timeScale(presentationTimeStamp.seconds) {

presentationTimeStamp = CMTimeMakeWithSeconds(presentationTimeStampScaled, preferredTimescale: kPreferredTimeScale)

...

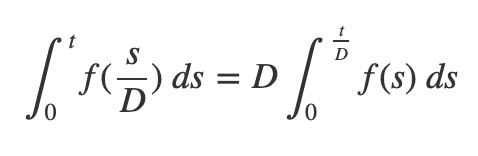

Approximating Floating Point Numbers With Rational Numbers

When seconds, a floating point number of type Float64, is converted to a CMTime with CMTimeMakeWithSeconds, the preferredTimescale is set to kPreferredTimeScale, which has a high value of 64000.

This ensures the CMTime, a rational number with kPreferredTimeScale as its denominator, represents the Float64 value with a high degree of accuracy. If the timescale is too low the Xcode log may present a warning:

2022-04-12 23:37:58.287225-0400 ScaleVideo[68292:3560687] CMTimeMakeWithSeconds(0.082 seconds, timescale 24): warning: error of -0.040 introduced due to very low timescale

But more importantly the video will likely be defective.

To minimize the likelihood of this occurring the choice of preferredTimescale needs to be sufficiently high:

Approximating a floating point number X by a quotient of two integers N/D necessarily incurs a possible error whose magnitude is bounded by 1/D, depending on the rounding method:

$$\left| X - \frac{N}{D} \right| \le \frac{1}{D}$$

The larger the choice of D, the better the approximation. However, there is a limit on how large preferredTimescale may be. See the discussion in the header for CMTimeMakeWithSeconds, which notes: “If the preferred timescale will cause an overflow, the timescale will be halved repeatedly until the overflow goes away.” The time scaling function should be defined to avoid this type of scaling.
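The effect of the denominator can be seen with plain arithmetic. The sketch below approximates seconds by N / timescale with truncation toward zero. Truncation is an assumption, chosen because it reproduces the error in the logged warning above; it is not a statement about how CMTimeMakeWithSeconds is implemented, and quantizationError is a hypothetical helper.

```swift
import Foundation

// Sketch: approximate `seconds` by N / timescale, truncating toward zero
// (an assumption), and return the signed error of the approximation.
func quantizationError(seconds: Double, timescale: Int) -> Double {
    let n = (seconds * Double(timescale)).rounded(.towardZero)
    return n / Double(timescale) - seconds
}

// Timescale 24: 0.082 s becomes 1/24 s, an error of about -0.040 as in the log.
let coarseError = quantizationError(seconds: 0.082, timescale: 24)
// Timescale 64000: the error magnitude is bounded by 1/64000, about 0.0000156.
let fineError = quantizationError(seconds: 0.082, timescale: 64000)
```

At 24 frames per second the source frames are about 0.042 seconds apart, so an error of 0.040 is nearly a whole frame interval, while at a timescale of 64000 the error is far smaller than any realistic frame spacing.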

The timeScale method uses the integrator to scale time. The timeScale method is passed the presentation time of the current sample buffer.

The method copyNextSampleBufferForResampling with these adjustments completes time scaling the video frames with resampling:

func copyNextSampleBufferForResampling(lastPercent:CGFloat) -> CGFloat {

self.sampleBuffer = nil

guard let sampleBuffer = self.videoReaderOutput?.copyNextSampleBuffer() else {

return lastPercent

}

self.sampleBuffer = sampleBuffer

if self.videoReaderOutput.outputSettings != nil {

var presentationTimeStamp = CMSampleBufferGetPresentationTimeStamp(sampleBuffer)

if let presentationTimeStampScaled = self.timeScale(presentationTimeStamp.seconds) {

presentationTimeStamp = CMTimeMakeWithSeconds(presentationTimeStampScaled, preferredTimescale: kPreferredTimeScale)

if let adjustedSampleBuffer = sampleBuffer.setTimeStamp(time: presentationTimeStamp) {

self.sampleBufferPresentationTime = presentationTimeStamp

self.sampleBuffer = adjustedSampleBuffer

}

else {

self.sampleBuffer = nil

}

}

else {

self.sampleBuffer = nil

}

}

...

}

Scaling: writeVideoOnQueueScaled

The new method writeVideoOnQueueScaled writes video frames without resampling to produce a variable rate video.

The presentation time of each frame is scaled as in copyNextSampleBufferForResampling:

guard let sampleBuffer = self.videoReaderOutput?.copyNextSampleBuffer() else {

self.finishVideoWriting()

return

}

var presentationTimeStamp = CMSampleBufferGetPresentationTimeStamp(sampleBuffer)

if let presentationTimeStampScaled = self.timeScale(presentationTimeStamp.seconds) {

presentationTimeStamp = CMTimeMakeWithSeconds(presentationTimeStampScaled, preferredTimescale: kPreferredTimeScale)

if let adjustedSampleBuffer = sampleBuffer.setTimeStamp(time: presentationTimeStamp) {

self.sampleBuffer = adjustedSampleBuffer

}

else {

self.sampleBuffer = nil

}

}

else {

self.sampleBuffer = nil

}

guard let sampleBuffer = self.sampleBuffer, self.videoWriterInput.append(sampleBuffer) else {

self.videoReader?.cancelReading()

self.finishVideoWriting()

return

}

After sample buffers are read with copyNextSampleBuffer the new presentation time is set to the scaled presentation time and output with append.

For a variable rate video the scaled video frame times can be compressed so that they are very close in time. Scaled frame times are floating point numbers that will be approximated by CMTime, a rational number. Therefore the error in the approximation should be smaller than the actual distance between the scaled times. The section Approximating Floating Point Numbers With Rational Numbers above explains this in more depth.
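One way to check this condition for a given integrator is to compute the smallest gap between consecutive scaled frame times and compare it with 1/timescale. The sketch below is an illustration only. It assumes the time scale method has the form duration * integrator(t / duration), and the duration and frame rate are hypothetical values.

```swift
import Foundation

// Assumed form of the time scale method: map time to the unit interval,
// apply the integrator, and map back to seconds.
func timeScale(_ t: Double, duration: Double, integrator: (Double) -> Double) -> Double {
    return duration * integrator(t / duration)
}

let duration = 10.0     // hypothetical video duration in seconds
let frameRate = 30.0    // hypothetical source frame rate
let integrator: (Double) -> Double = { $0 * $0 / 2 }  // s(t) = t^2/2

let frameCount = Int(duration * frameRate)
let scaledTimes = (0...frameCount).map {
    timeScale(Double($0) / frameRate, duration: duration, integrator: integrator)
}

// Smallest gap between consecutive scaled frame times.
let minGap = zip(scaledTimes.dropFirst(), scaledTimes).map { $0 - $1 }.min()!

print(minGap)                     // about 1/18000 of a second
print(minGap > 1.0 / 64000.0)     // true: resolvable at timescale 64000
print(minGap > 1.0 / 600.0)       // false: not resolvable at timescale 600
```

For these values the closest pair of frames is about 0.000056 seconds apart, which a timescale of 64000 can distinguish but a timescale of 600 cannot.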

2. Audio writer writeAudioOnQueue

The discussion here focuses on how the ScaleVideo class in ScaleVideo has been modified to support variable time scaling:

Scaling Audio Variably

- Review interpolation for uniform scaling

- Review computing control points uniformly

- Algorithm for computing control points variably

- ControlBlocks for variably scaling audio

Refer to ScaleAudio for a detailed discussion on how audio is uniformly scaled using linear interpolation with the vDSP methods in the Accelerate framework.

ScaleVideo extended that method of ScaleAudio to video audio in a way that manages memory with a class ControlBlocks to scale audio progressively as the audio buffers are read.

The ControlBlocks approach has been adapted to variable scaling in this project and requires the audio sample rate to calculate audio sample times.

A new ScaleVideo property sampleRate for the sample rate of the video audio is set in the initializer using the AudioStreamBasicDescription. The sample rate is used to synchronize the audio to the time scaled video frames.

var sampleRate:Float64

...

init?(path : String, frameRate: Int32, destination: String, integrator:@escaping (Double) -> Double, progress: @escaping (CGFloat, CIImage?) -> Void, completion: @escaping (URL?, String?) -> Void) {

self.integrator = integrator

if frameRate > 0 { // we are resampling

let scale:Int32 = 600

self.frameDuration = CMTime(value: 1, timescale: CMTimeScale(frameRate)).convertScale(scale, method: CMTimeRoundingMethod.default)

}

super.init(path: path, destination: destination, progress: progress, completion: completion)

self.videoDuration = self.videoAsset.duration.seconds

ciOrientationTransform = videoAsset.ciOrientationTransform()

if let outputSettings = audioReaderSettings(),

let sampleBuffer = self.videoAsset.audioSampleBuffer(outputSettings:outputSettings),

let sampleBufferSourceFormat = CMSampleBufferGetFormatDescription(sampleBuffer),

let audioStreamBasicDescription = sampleBufferSourceFormat.audioStreamBasicDescription

{

outputBufferSize = sampleBuffer.numSamples

channelCount = Int(audioStreamBasicDescription.mChannelsPerFrame)

totalSampleCount = self.videoAsset.audioBufferAndSampleCounts(outputSettings).sampleCount

sourceFormat = sampleBufferSourceFormat

sampleRate = audioStreamBasicDescription.mSampleRate

}

else {

progressFactor = 1

}

}

Review interpolation for uniform scaling.


Given an array A of audio samples to scale by factor, the length N of the uniformly scaled audio array S is:

N = factor * A.count

S is computed with linear interpolation of the elements of A with vDSP_vlintD and an array c of N control points.

Each control point c[j] is a Double whose integer part is an index i into the array A of audio samples, and the fractional part f, where 0 <= f < 1, defines how to interpolate A[i] with A[i+1] to produce the scaled array element S[j] with the interpolation formula:

Interpolation Formula

S[j] = A[i]*(1 - f) + A[i+1]*f

This method can be used to scale audio up when factor > 1 or down when factor < 1.
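The interpolation formula can be tried out without Accelerate. The following is a minimal sketch in plain Swift, not the vDSP_vlintD call used by the project. The function name interpolate is hypothetical, and control points are assumed to lie between 0 and the last index of the sample array.

```swift
import Foundation

// Linear interpolation of `samples` at the given control points, following
// S[j] = A[i]*(1 - f) + A[i+1]*f with i the integer part and f the fraction.
// Control points are assumed to lie in 0...samples.count-1.
func interpolate(_ samples: [Double], control: [Double]) -> [Double] {
    return control.map { (c: Double) -> Double in
        let i = Int(c)
        let f = c - Double(i)
        // When f is zero the next sample is not needed, which also keeps
        // the last index in bounds.
        guard f > 0, i + 1 < samples.count else { return samples[i] }
        return samples[i] * (1 - f) + samples[i + 1] * f
    }
}

let a: [Double] = [0, 10, 20]
let s = interpolate(a, control: [0, 0.5, 1, 1.5, 2])
print(s)  // [0.0, 5.0, 10.0, 15.0, 20.0]
```

Here three samples are scaled up to five, with each new sample halfway between its neighbors.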

Review computing control points uniformly.


Control points for uniform scaling of array A are a ramp of N Double values between 0 and A.count-1, with N given by:

N = factor * A.count

In ScaleVideo an option smoothly was a flag for computing control points either manually with a smoothstep function, or using the vDSP routine vDSP_vgenD:

let stride = vDSP_Stride(1)

var control:[Double]

if smoothly, length > self.count {

let denominator = Double(length - 1) / Double(self.count - 1)

control = (0...length - 1).map {

let x = Double($0) / denominator

return floor(x) + simd_smoothstep(0, 1, simd_fract(x))

}

}

else {

var base: Double = 0

var end = Double(self.count - 1)

control = [Double](repeating: 0, count: length)

vDSP_vgenD(&base, &end, &control, stride, vDSP_Length(length))

}

For the smoothly option the control points are not in fact uniform.
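The difference between the two options can be seen with a small sketch in plain Swift. The functions uniformControls, smoothControls and smoothstep are hypothetical stand-ins. smoothstep is a hand-written Hermite polynomial used here in place of simd_smoothstep(0, 1, x), and the ramp is computed directly rather than with vDSP.

```swift
import Foundation

// Uniform ramp of `length` control points from 0 to count-1.
func uniformControls(count: Int, length: Int) -> [Double] {
    return (0..<length).map { Double(count - 1) * Double($0) / Double(length - 1) }
}

// Hand-written smoothstep on [0,1], standing in for simd_smoothstep(0, 1, x).
func smoothstep(_ x: Double) -> Double {
    return x * x * (3 - 2 * x)
}

// Control points with the fractional part reshaped by smoothstep.
func smoothControls(count: Int, length: Int) -> [Double] {
    let denominator = Double(length - 1) / Double(count - 1)
    return (0..<length).map { (n: Int) -> Double in
        let x = Double(n) / denominator
        return floor(x) + smoothstep(x - floor(x))
    }
}

let uniform = uniformControls(count: 3, length: 7)
let smooth = smoothControls(count: 3, length: 7)
print(uniform)  // evenly spaced steps of 1/3
print(smooth)   // same integer parts, unevenly spaced fractions
```

Both arrays agree at the integer control points 0, 1 and 2, but between them the smooth variant moves from 1/3 to about 0.259 and from 2/3 to about 0.741.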

Algorithm for computing control points variably.


Calculation of control points for variable scaling employs the time scale method in ScaleVideo and the sample rate of the audio file being scaled.

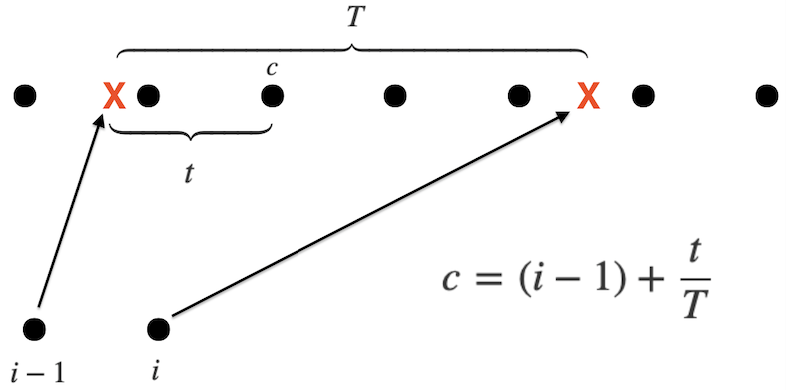

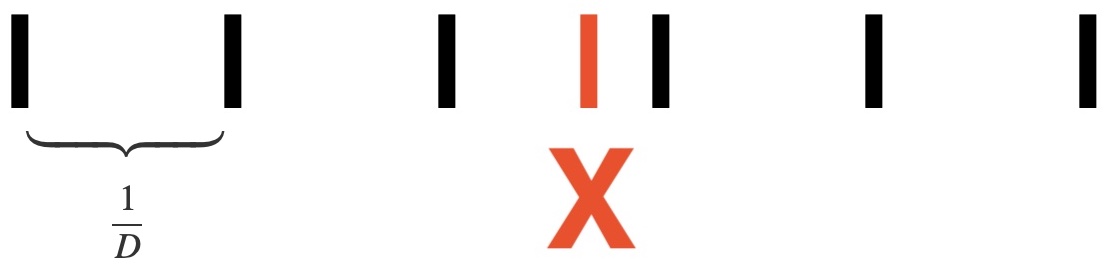

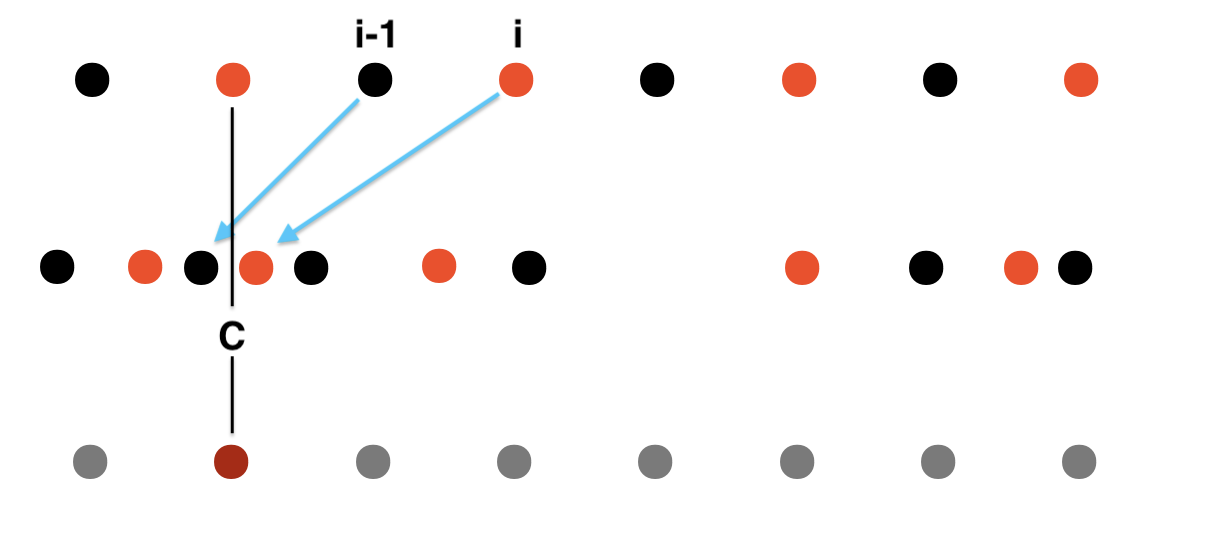

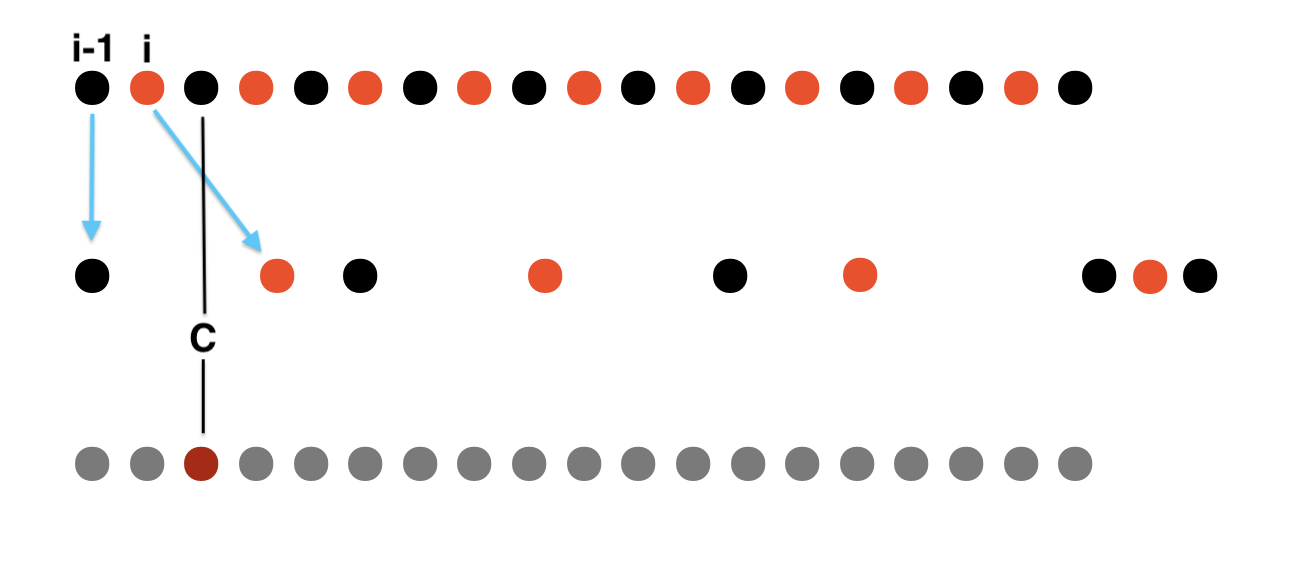

As illustrated in this diagram, the scaled audio will have the same sample rate as the audio being scaled, which suggests how to proceed:

The bottom row is adjacent audio samples with indices i-1 and i in the timeline of the existing array of audio samples.

Similarly the unknown scaled audio samples are drawn at the top of this diagram at the same sample rate. The sample rate determines the time of each sample in the timeline.

Using the time scale method scaled locations of the pair of audio samples i-1 and i are drawn in the timeline of the scaled audio samples at locations marked with red X’s.

The objective is to compute a control point c for each of the scaled audio samples that lie between these scaled time locations X.

Since the times of the scaled audio samples are known from the sample rate, and the times of the locations X are known, the time differences t and T in the diagram can be determined.

Applying the definition of a control point c as a floating point number whose integer and fractional parts are used by the interpolation formula the expression for c is:

Control Point Formula

c = (i-1) + (t/T)

In the implementation of ControlBlocks below all audio samples are iterated and this formula is applied to create blocks of control points for the linear interpolation.
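As a worked instance of the control point formula, the sketch below uses hypothetical numbers: a sample rate of 4 Hz, the pair of audio samples 3 and 4, and scaled times 1.6 and 2.2 for the two locations X. None of these values come from the project.

```swift
import Foundation

let sampleRate = 4.0          // hypothetical, kept tiny for readability
let i = 4                     // index of the right-hand sample of the pair
let lastScaledTime = 1.6      // hypothetical scaled time of sample i-1
let scaledTime = 2.2          // hypothetical scaled time of sample i
let T = scaledTime - lastScaledTime

var controls: [Double] = []
// Index of the first scaled sample at or after the left location X.
var k = Int((lastScaledTime * sampleRate).rounded(.up))
while Double(k) / sampleRate < scaledTime {
    let t = Double(k) / sampleRate - lastScaledTime
    controls.append(Double(i - 1) + t / T)   // c = (i-1) + (t/T)
    k += 1
}
print(controls)  // approximately [3.25, 3.667]
```

The scaled samples at times 1.75 and 2.0 fall between the two locations, so they receive control points 3 + 0.15/0.6 and 3 + 0.4/0.6.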

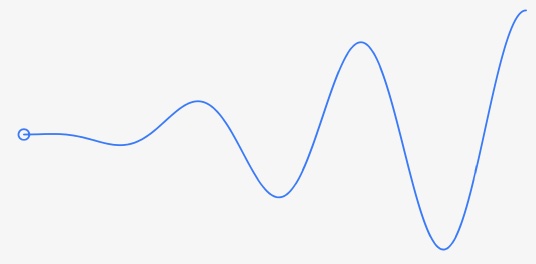

The following diagrams illustrate time scaling both up and down with a correspondence drawn between an interpolated audio sample in the bottom row and the top row of existing audio samples. The vertical black line marked with c, for control point, is used to show that the interpolated audio samples can be aligned with the original audio samples because they share the same sample rate. The black line also intersects the location in the middle row of the actual variably scaled audio samples where the interpolated value for c is located. The color of the scaled sample is a shade of red to suggest it is computed using the interpolation formula.

Scaling down:

Scaling up:

ControlBlocks for variably scaling audio.


In ScaleAudio the entire array A of audio samples is scaled at once requiring memory for that array plus memory for the control points.

In ScaleVideo the objective was to reuse the audio scaling method of ScaleAudio but in a progressive manner so it was not required to keep all the audio samples and control points in memory at once.

Audio scaling is performed while the audio samples are read in writeAudioOnQueue using subarrays, or blocks, of the control points as needed.

The ControlBlocks class was introduced for this purpose as a virtual mutable array of blocks of control points, with methods first and removeFirst defined to mimic the same methods of an actual mutable array.

This way blocks of control points for linear interpolation are obtained with first and advancement to the next block is signaled with removeFirst. Refer to ScaleVideo for the details of how first and removeFirst are used to read, scale and write audio sample buffers in writeAudioOnQueue.

This project retains ControlBlocks with new implementations of first and removeFirst.

In ScaleVideo each control point c was expressed as a function of its own index n:

func control(n:Int) -> Double { // n in 0...length-1

if length > 1, n == length-1 {

return Double(count-1)

}

if count == 1 || length == 1 {

return 0

}

if smoothly, length > count {

let denominator = Double(length - 1) / Double(count - 1)

let x = Double(n) / denominator

return floor(x) + simd_smoothstep(0, 1, simd_fract(x))

}

return Double(count - 1) * Double(n) / Double(length-1)

}

Control points are now computed in the way described in the algorithm for computing control points variably employing the control point formula.

Three Tasks

For each pair of audio samples the following three tasks need to be performed:

- Compute their scaled time using the time scale method

- Determine which control point times are contained by the time span of those scaled times

- Compute the control points that correspond to those control point times

Control points will not be generated one at a time as in ScaleVideo but rather with an iterative procedure that needs to work closely with the ScaleVideo object for:

- Progress reporting

- Time scale method

- Control block size

- Audio sample rate

To that end the init method of ControlBlocks takes only one argument that provides the reference to ScaleVideo:

init?(scaleVideo:ScaleVideo) {

self.scaleVideo = scaleVideo

let sampleRate = scaleVideo.sampleRate

let count = scaleVideo.totalSampleCount

let size = scaleVideo.outputBufferSize

guard sampleRate > 0, count > 0, size > 0 else {

return nil

}

self.sampleRate = sampleRate

self.count = count

self.size = size

}

count is still the length of the array of all audio samples.

size is still the size of the sample buffers that will contain the scaled audio samples.

sampleRate is the sample rate of the audio. It is used to calculate the audio sample and control point times from their indices as suggested in the algorithm diagram above.

The algorithm for computing control points variably is implemented by the ControlBlocks method fillControls, performing the three tasks listed above, as well as sending progress to ScaleVideo:

func fillControls(range:ClosedRange<Int>) -> Int? {

control.removeAll()

control.append(contentsOf: leftoverControls)

leftoverControls.removeAll()

if audioIndex == 0 {

controlIndex += 1

if (controlIndex >= range.lowerBound && controlIndex <= range.upperBound) {

control.append(0)

}

}

while audioIndex <= count-1 {

if scaleVideo.isCancelled {

return nil

}

audioIndex += 1

if audioIndex % Int(sampleRate) == 0 { // throttle rate of sending progress

let percent = CGFloat(audioIndex)/CGFloat(count-1)

scaleVideo.cumulativeProgress += ((percent - lastPercent) * scaleVideo.progressFactor)

lastPercent = percent

scaleVideo.progressAction(scaleVideo.cumulativeProgress, nil)

print(scaleVideo.cumulativeProgress)

}

let time = Double(audioIndex) / sampleRate

guard let scaledTime = scaleVideo.timeScale(time) else {

return nil

}

if scaledTime > lastScaledTime {

let timeRange = scaledTime - lastScaledTime

controlTime = Double(controlIndex) / sampleRate

while controlTime >= lastScaledTime, controlTime < scaledTime {

let fraction = (controlTime - lastScaledTime) / timeRange

controlIndex += 1

if (controlIndex >= range.lowerBound && controlIndex <= range.upperBound) {

control.append(Double(audioIndex-1) + fraction)

}

else if controlIndex > range.upperBound {

leftoverControls.append(Double(audioIndex-1) + fraction)

}

controlTime = Double(controlIndex) / sampleRate

if scaleVideo.isCancelled {

return nil

}

}

}

lastScaledTime = scaledTime

if controlIndex >= range.upperBound {

break

}

}

return controlIndex

}

fillControls iterates over all audio samples as it is called repeatedly by first from writeAudioOnQueue, referencing values that are stored outside of the method as properties of ControlBlocks:

var count:Int

var size:Int

var sampleRate:Float64 = 0

var control:[Double] = []

var scaleVideo:ScaleVideo

var currentBlockIndex:Int = 0

var lastScaledTime:Double = 0

var controlTime:Double = 0

var audioIndex:Int = 0

var controlIndex:Int = 0

var leftoverControls:[Double] = []

These properties are:

- count = count of audio samples

- size = count of scaled audio samples per output audio sample buffer

- sampleRate = sample rate of the audio

- control = array that holds a block of control points

- scaleVideo = object that performs time scaling and sends progress to user

- currentBlockIndex = first index of the current control block

- lastScaledTime = value of the previously scaled time of an audio sample

- controlTime = time of the current control point

- audioIndex = index of the current audio sample

- controlIndex = index of the current control point

- leftoverControls = control points that had to be calculated but need to be saved for the next invocation

The method fillControls begins with setup:

control.removeAll()

control.append(contentsOf: leftoverControls)

leftoverControls.removeAll()

The previous contents of control are cleared and replaced with the leftoverControls from the previous invocation.

For the range range:ClosedRange<Int> of control points passed to fillControls, iteration through the audio samples continues:

while audioIndex <= count-1 {

audioIndex += 1

// process audio sample pairs audioIndex-1, audioIndex:

// 1. Compute their scaled time using the time scale method

// 2. Determine which control point times are contained by the time span of those scaled times

// 3. Compute the control points that correspond to those control point times

// exit when the end of the range is reached

if controlIndex >= range.upperBound {

break

}

}

Perform the three tasks for each pair of audio samples until a condition to exit is reached:

1. Compute their scaled time using the time scale method

var lastScaledTime:Double = 0

...

let time = Double(audioIndex) / sampleRate

guard let scaledTime = scaleVideo.timeScale(time) else {

return nil

}

// ...process step 2 : Determine which control point times are contained by the time span of those scaled times...

lastScaledTime = scaledTime

Note that the sampleRate is used to convert the current audio sample index audioIndex to a time.

2. Determine which control point times are contained by the time span of those scaled times

if scaledTime > lastScaledTime {

let timeRange = scaledTime - lastScaledTime

controlTime = Double(controlIndex) / sampleRate

while controlTime >= lastScaledTime, controlTime < scaledTime {

// ...process step 3: Compute the control points that correspond to those control point times...

}

}

Note again that the sampleRate is used to convert the current control point index controlIndex to a time.

3. Compute the control points that correspond to those control point times

let fraction = (controlTime - lastScaledTime) / timeRange

controlIndex += 1

if (controlIndex >= range.lowerBound && controlIndex <= range.upperBound) {

control.append(Double(audioIndex-1) + fraction)

}

else if controlIndex > range.upperBound {

leftoverControls.append(Double(audioIndex-1) + fraction)

}

controlTime = Double(controlIndex) / sampleRate

Note that the calculation continues for the case controlIndex > range.upperBound, and those values are stored in leftoverControls for the next invocation.
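The three steps can be condensed into a simplified, self-contained sketch that computes all control points at once. This is not the project's fillControls: the function controlPoints is hypothetical, it omits block ranges, leftovers, progress and cancellation, and the time scale method is passed in as a closure.

```swift
import Foundation

// Simplified sketch of the fillControls loop: all control points at once,
// without block ranges, leftovers, progress or cancellation.
func controlPoints(count: Int, sampleRate: Double, timeScale: (Double) -> Double) -> [Double] {
    var control: [Double] = [0]   // the first scaled sample maps to the first audio sample
    var controlIndex = 1
    var lastScaledTime = 0.0

    // As in the source loop, audioIndex runs from 1 through count.
    for audioIndex in 1...count {
        // 1. Scaled time of the current audio sample.
        let scaledTime = timeScale(Double(audioIndex) / sampleRate)

        if scaledTime > lastScaledTime {
            let timeRange = scaledTime - lastScaledTime
            var controlTime = Double(controlIndex) / sampleRate

            // 2. Control point times inside [lastScaledTime, scaledTime).
            while controlTime >= lastScaledTime, controlTime < scaledTime {
                // 3. Control point from the control point formula.
                let fraction = (controlTime - lastScaledTime) / timeRange
                control.append(Double(audioIndex - 1) + fraction)
                controlIndex += 1
                controlTime = Double(controlIndex) / sampleRate
            }
        }
        lastScaledTime = scaledTime
    }
    return control
}

// Hypothetical values: 3 samples at 4 Hz, time uniformly doubled.
let doubled = controlPoints(count: 3, sampleRate: 4) { 2 * $0 }
print(doubled)  // [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
```

Doubling time yields twice as many control points as audio samples, spaced half a sample apart, which agrees with N = factor * A.count for uniform scaling.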

fillControls is used by the implementation of first, which returns the current block of control points that have been computed by fillControls and stored into an array property control:

func first() -> [Double]? {

if lastCurrentBlockIndex == currentBlockIndex {

return control

}

lastCurrentBlockIndex = currentBlockIndex

let start = currentBlockIndex

let end = currentBlockIndex + size

guard let _ = fillControls(range: start...end-1) else {

return nil

}

guard control.count > 0 else {

return nil

}

return control

}

As in ScaleVideo the currentBlockIndex property is the start index of the current block of control points in the array of all control points, i.e. the block returned by first.

The method removeFirst advances that index by size, i.e. the size of the sample buffer of scaled audio samples to be written:

func removeFirst() {

currentBlockIndex += size

}

first is called more than once in writeAudioOnQueue before calling removeFirst. So when a new control block is generated lastCurrentBlockIndex stores the value of currentBlockIndex to use as a signal to generate new controls in first. Otherwise first returns the current controls:

func first() -> [Double]? {

if lastCurrentBlockIndex == currentBlockIndex {

return control

}

lastCurrentBlockIndex = currentBlockIndex

// ...return new controls...

}

When it is time to generate a control block, the range from currentBlockIndex to currentBlockIndex + size - 1 is passed to fillControls:

let start = currentBlockIndex

let end = currentBlockIndex + size

guard let _ = fillControls(range: start...end-1) else {

return nil

}
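The interplay of first and removeFirst can be illustrated in isolation. In the sketch below the class BlockCache and its generate closure are hypothetical stand-ins for ControlBlocks and fillControls, and an optional lastCurrentBlockIndex is an assumption made so that the very first call generates a block.

```swift
import Foundation

// Hypothetical stand-in for ControlBlocks: `generate` plays the role of fillControls.
final class BlockCache {
    let size: Int
    let generate: (ClosedRange<Int>) -> [Double]
    private(set) var generationCount = 0
    private var currentBlockIndex = 0
    private var lastCurrentBlockIndex: Int? = nil
    private var control: [Double] = []

    init(size: Int, generate: @escaping (ClosedRange<Int>) -> [Double]) {
        self.size = size
        self.generate = generate
    }

    // Returns the current block, generating it only on the first request.
    func first() -> [Double]? {
        if lastCurrentBlockIndex == currentBlockIndex {
            return control
        }
        lastCurrentBlockIndex = currentBlockIndex
        control = generate(currentBlockIndex...(currentBlockIndex + size - 1))
        generationCount += 1
        return control.isEmpty ? nil : control
    }

    // Advances to the next block; the next call to first() regenerates.
    func removeFirst() {
        currentBlockIndex += size
    }
}

let cache = BlockCache(size: 4) { range in range.map { Double($0) } }
let a1 = cache.first()
let a2 = cache.first()   // cached, no regeneration
cache.removeFirst()
let b = cache.first()
print(a1!, a2!, b!, cache.generationCount)
```

Calling first twice in a row generates only one block, and a new block is produced only after removeFirst has advanced the index.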

Conclusion

The ScaleVideo class of ScaleVideo was extended for variably time scaling video and audio using integration to compute time scale factors that vary over time.

The ScaleVideo initializer init:

init?(path : String, frameRate: Int32, destination: String, integrator:@escaping (Double) -> Double, progress: @escaping (CGFloat, CIImage?) -> Void, completion: @escaping (URL?, String?) -> Void)

Arguments:

- path: String - The path of the video file to be scaled.

- frameRate: Int32 - The desired frame rate of the scaled video. Specify 0 for variable rate.

- destination: String - The path of the scaled video file.

- integrator: Closure - A function that is the definite integral of the instantaneous time scale function on the unit interval [0,1].

- progress: Closure - A handler that is periodically executed to send progress images and values.

- completion: Closure - A handler that is executed when the operation has completed to send a message of success or not.

The ScaleVideoApp.swift file contains sample code that can be run in the init() method to exercise the method.

The samples generate files into the Documents folder.

Run the app on the Mac and navigate to the Documents folder using the Go to Documents button, or use Go to Folder… from the Go menu in the Finder using the paths to the generated videos that will be printed in the Xcode log view.

The integralTests series of examples uses numerical integration of various instantaneous time scaling functions for the integrator:

// iterate all tests:

let _ = IntegralType.allCases.map({ integralTests(integralType: $0) })

The antiDerivativeTests series of examples uses the antiderivative of various instantaneous time scaling functions for the integrator:

let _ = AntiDerivativeType.allCases.map({ antiDerivativeTests(antiDerivativeType: $0) })

Antiderivative Examples

Since the app code described above is itself an example of using numerical integration to compute time scaling only antiderivative examples are given here.

Three different time scaling functions are defined by their antiderivatives:

enum AntiDerivativeType: CaseIterable {

case constantDoubleRate

case constantHalfRate

case variableRate

}

func antiDerivativeTests(antiDerivativeType:AntiDerivativeType) {

var filename:String

switch antiDerivativeType {

case .constantDoubleRate:

filename = "constantDoubleRate.mov"

case .constantHalfRate:

filename = "constantHalfRate.mov"

case .variableRate:

filename = "variableRate.mov"

}

func antiDerivative(_ t:Double) -> Double {

var value:Double

switch antiDerivativeType {

case .constantDoubleRate:

value = t / 2

case .constantHalfRate:

value = 2 * t

case .variableRate:

value = t * t / 2

}

return value

}

let fm = FileManager.default

let docsurl = try! fm.url(for:.documentDirectory, in: .userDomainMask, appropriateFor: nil, create: true)

let destinationPath = docsurl.appendingPathComponent(filename).path

let scaleVideo = ScaleVideo(path: kDefaultURL.path, frameRate: 30, destination: destinationPath, integrator: antiDerivative, progress: { p, _ in

print("p = \(p)")

}, completion: { result, error in

print("result = \(String(describing: result))")

})

scaleVideo?.start()

}

Run with:

let _ = AntiDerivativeType.allCases.map({ antiDerivativeTests(antiDerivativeType: $0) })

Example 1

The integrator is the antiderivative:

s(t) = t/2

The instantaneous time scaling function is:

s’(t) = 1/2

In terms of integrals:

∫ s’(t) dt = ∫ 1/2 dt = t/2

So time is locally scaled by 1/2 uniformly and the resulting video constantDoubleRate.mov plays uniformly at double the original rate.

Example 2

The integrator is the antiderivative:

s(t) = 2 t

The instantaneous time scaling function is:

s’(t) = 2

In terms of integrals:

∫ s’(t) dt = ∫ 2 dt = 2 t

So time is locally scaled by 2 uniformly and the resulting scaled video constantHalfRate.mov plays uniformly at half the original rate.

Example 3

The integrator is the antiderivative:

s(t) = t^2/2

The instantaneous time scaling function is:

s’(t) = t

In terms of integrals:

∫ s’(t) dt = ∫ t dt = t^2/2

So time is locally scaled at a variable rate t and the resulting scaled video variableRate.mov plays at a variable rate that starts fast and slows to end at normal speed.
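The relationship between an instantaneous time scale function and its antiderivative can be checked numerically. The sketch below uses a hand-written trapezoidal rule, not the Quadrature code the project uses, and the function integrate is hypothetical.

```swift
import Foundation

// Trapezoidal rule for the definite integral of f from 0 to t.
func integrate(_ f: (Double) -> Double, to t: Double, steps: Int = 1000) -> Double {
    let h = t / Double(steps)
    var sum = (f(0) + f(t)) / 2
    for k in 1..<steps {
        sum += f(Double(k) * h)
    }
    return sum * h
}

let rate: (Double) -> Double = { $0 }                      // s'(t) = t
let antiDerivative: (Double) -> Double = { $0 * $0 / 2 }   // s(t) = t^2/2

for t in [0.25, 0.5, 1.0] {
    // The two columns should agree up to floating point error.
    print(t, integrate(rate, to: t), antiDerivative(t))
}
```

If the time scale method multiplies the integrator's value by the video duration, which is an assumption here, then the value of the antiderivative at 1 gives the ratio of the scaled duration to the original: 1/2, 2 and 1/2 for the three examples.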