PlotAudio

Draws audio waveforms

Learn how to create a visual representation of audio by plotting its samples as decibels.

PlotAudio

The associated Xcode project implements a SwiftUI app for macOS and iOS that processes audio samples in a file to plot their decibel values over time.

The subfolder PlotAudio contains a small set of independent files that prepare and display audio data in a specialized interactive view. The remaining files build an app around that functionality.

A list of audio and video files for processing is included in the bundle resources subdirectories Audio Files and Video Files.

Add your own audio and video files or use the sample set provided.

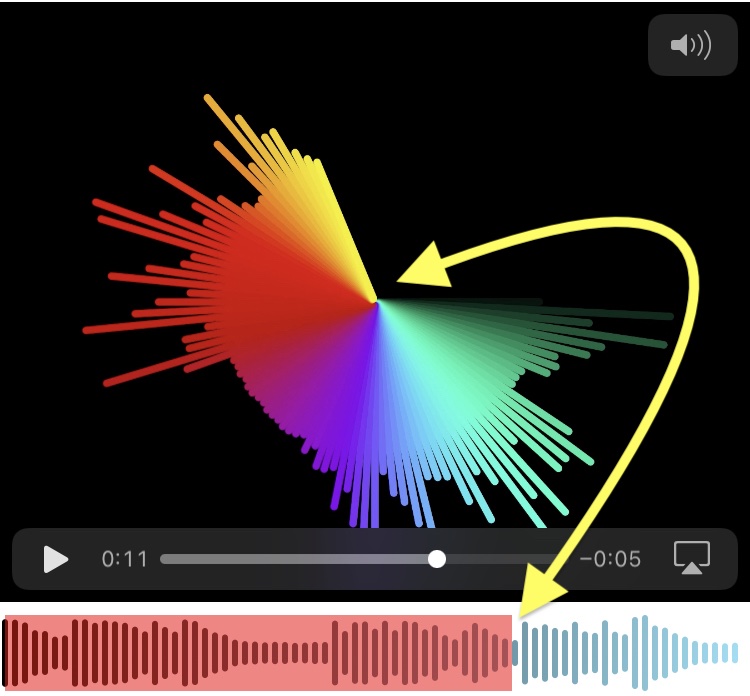

Tap on a file in the list to plot its audio.

Adjust controls for appearance and drag or tap on the audio plot to set play locations.


Introduction

In physics, sound is a mechanical vibration of air that can induce electric signals in devices such as microphones. The voltage of the electric signal can then be quantized into numbers, called amplitudes, by sampling over time with an analog-to-digital converter, and stored as an array of sample values in a digital audio or video media file.

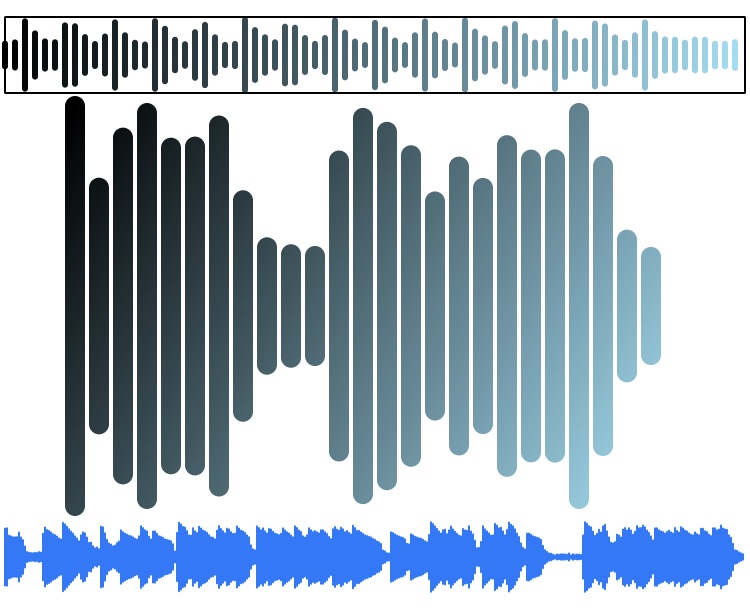

PlotAudio reads the sample values stored in media files, converts them to decibels, and then subsamples over the time domain to plot them in a view as vertical bars, with height proportional to decibel value. An indicator overlay that responds to dragging and tapping on the view is added to visually identify playback time.

Plotting the raw digital sample values does not work well due to the high sample rate and quantization level required for high quality reproduction.

The high sample rate required for audio follows from the classical Nyquist–Shannon sampling theorem, which says that a band-limited signal with maximum frequency B can be exactly reconstructed from its samples if the sample rate R is greater than twice the maximum frequency, or R > 2B. Otherwise aliasing can occur, and the reconstructed signal misrepresents the original. Consequently, for a hearing range up to 20,000 cycles per second (20 kHz), a sample rate greater than 2 × 20,000 = 40,000 samples per second is required.
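As a rough illustration of aliasing, the following sketch computes the frequency at which a pure tone appears after sampling. The function name aliasedFrequency is hypothetical, and the folding formula assumes an ideal sampler; it is not taken from the PlotAudio project.

```swift
import Foundation

// Hypothetical helper: apparent frequency of a pure tone of `frequency` Hz
// after ideal sampling at `sampleRate` Hz (folds into 0...sampleRate/2).
func aliasedFrequency(of frequency: Double, sampleRate: Double) -> Double {
    let nearestMultiple = (frequency / sampleRate).rounded() * sampleRate
    return abs(frequency - nearestMultiple)
}

// A 15 kHz tone is below half of 44.1 kHz, so it is preserved.
print(aliasedFrequency(of: 15_000, sampleRate: 44_100)) // 15000.0
// A 30 kHz tone exceeds half the sample rate and folds down to 14.1 kHz.
print(aliasedFrequency(of: 30_000, sampleRate: 44_100)) // 14100.0
```

A tone above half the sample rate is indistinguishable from a lower tone once sampled, which is why the sample rate has to exceed twice the highest frequency of interest.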

At the standard sampling rate of 44,100 samples per second, and 16-bit quantization, the sample count and amplitude range of even short duration audio far exceed the pixel dimensions of the display view. A good graphical representation of the sound from its samples requires signal processing in the range and domain dimensions to fit in the view. This dual dimension task can be accomplished with the help of two signal processing functions in the Accelerate framework:

Range: vDSP.convert for decibels

To fit both very small and very large amplitudes vertically in the same view, and preserve contrast, use vDSP.convert to convert the range of sample values to decibels.

Domain: vDSP_desampD for downsample

To fit the many amplitudes over time horizontally in the view use vDSP_desampD (or vDSP.downsample) to downsample to a desired size that takes into account the view width, bar width and spacing. An optional anti-aliasing averaging filter is applied to smooth the values.

(Note: The vDSP.downsample version of vDSP_desamp was broken, but appears to be fixed in the upcoming iOS 17.)

Decibels

Decibel conversion reduces the wide range of audio sample values to a size that can be plotted in a view whose pixel height is much smaller. The PlotAudio Function section discusses how these decibel values are drawn into a SwiftUI view.

Decibels are essentially a scaled base-10 logarithm of the ratio of the absolute value of each sample to a single reference value. The reference value, called the zero reference, is chosen as the maximum possible value of all samples, which means decibels are non-positive numbers in the range [-∞, 0], since the ratio is ≤ 1. To avoid -∞, values are clipped to a very low level, called the noise floor. See the Log Graph of Decibels section for more.

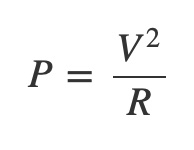

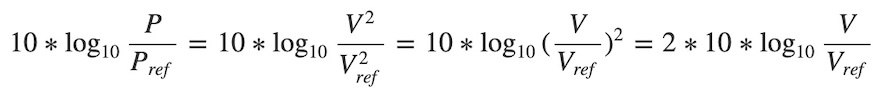

Decibels can be calculated using either power or voltage (root-power) quantities. This difference is due to the relation between power P, voltage V, and resistance R for electric signals:

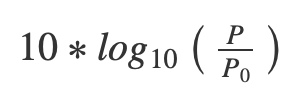

For power values P the formula for decibel is:

P0, also written Pref, is called the reference value of the quantity. A decibel is a measure, on a logarithmic scale, of how much smaller or larger a value is than a fixed nonzero reference value.

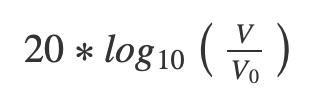

For root-power values V the formula for decibel is:

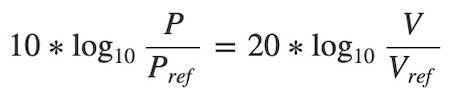

The power and root-power formulas for decibels are equivalent.

To show this, substitute the power-voltage relation P = V²/R into the decibel formula for power, and use the exponent rule of logarithms, log(a^b) = b log(a).
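Written out, the substitution reads as follows. The symbol V0 for the reference voltage corresponding to P0 is an assumption made here, as is the use of the same resistance R for both quantities:

$$
10 \log_{10}\left(\frac{P}{P_0}\right)
= 10 \log_{10}\left(\frac{V^2/R}{V_0^2/R}\right)
= 10 \log_{10}\left(\left(\frac{V}{V_0}\right)^{2}\right)
= 20 \log_{10}\left(\frac{V}{V_0}\right)
$$

The resistance cancels in the ratio, and the exponent 2 moves in front of the logarithm, turning the factor 10 into 20.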

The distinction for this application is that audio samples are a root-power voltage quantity. The power version produces plots with less contrast.

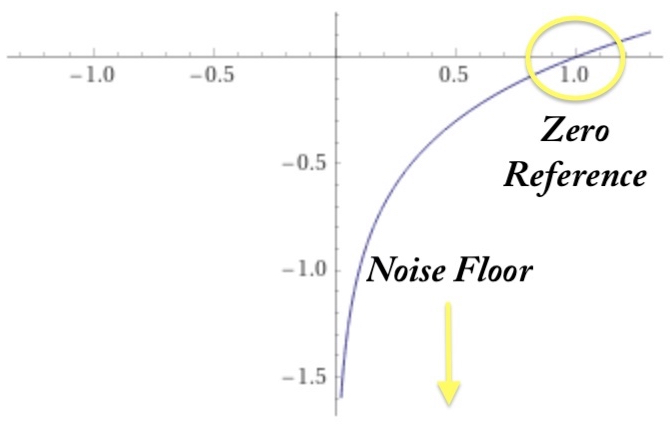
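The effect on contrast can be checked numerically. The sketch below applies both formulas to the same amplitude ratios, which is roughly what passing amplitudes to the power form amounts to. The function names are hypothetical and plain Swift is used instead of Accelerate.

```swift
import Foundation

// Root-power (amplitude) form: 20 * log10(ratio).
func amplitudeDecibels(_ ratio: Double) -> Double {
    return 20 * log10(ratio)
}

// Power form applied to the same ratio: 10 * log10(ratio).
func powerDecibels(_ ratio: Double) -> Double {
    return 10 * log10(ratio)
}

// Sample magnitudes expressed as a fraction of the zero reference.
let ratios: [Double] = [1.0, 0.1, 0.01, 0.001]
for ratio in ratios {
    print(ratio, amplitudeDecibels(ratio), powerDecibels(ratio))
}
// The amplitude form spreads these ratios over roughly 0 ... -60,
// while the power form uses only 0 ... -30.
```

For a fixed noise floor, the power form therefore uses only half as much of the available vertical range for the same samples.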

Log Graph of Decibels

Decibel values are the ratio of the absolute values of raw audio samples S to their maximum possible value Smax on a logarithmic scale using log10:

20 * log10(|S|/Smax)

Note that |S|/Smax ≤ 1.

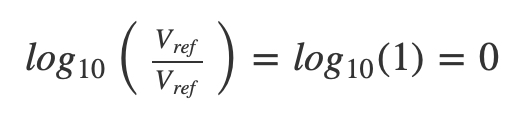

The value Smax is called the zero reference, as the decibel value of this value is 0:

20 * log10(Smax/Smax) = 20 * log10(1) = 0

The graph of log10 on the half-open interval (0, 1], with the value corresponding to the zero reference highlighted:

While audio sample values are generally signed numbers (+,-), decibels are non-positive numbers.

Since log10(0) = -∞, the decibel values need to be clipped to a finite lower bound called the noise floor. The default value is -70 decibels.

The audio reader in this app requests Int16 audio sample values, and the decibel value of the smallest nonzero positive value of Int16, namely 1, is about -90:

print(20 * log10(1.0/Double(Int16.max)))

// -90.30873362283398
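The conversion and clipping described in this section can be sketched for a single sample in plain Swift. The helper clippedDecibels is hypothetical and not part of PlotAudio, which performs these steps on whole arrays with Accelerate.

```swift
import Foundation

// Hypothetical helper, not part of PlotAudio: converts one Int16 sample to
// decibels relative to Int16.max and clips the result to noiseFloor...0.
func clippedDecibels(_ sample: Int16, noiseFloor: Double = -70) -> Double {
    // Convert before taking the absolute value: abs(Int16.min) would overflow.
    let magnitude = abs(Double(sample))
    let decibels = 20 * log10(magnitude / Double(Int16.max)) // -inf when sample == 0
    return min(0, max(noiseFloor, decibels))
}

print(clippedDecibels(0))                   // -70.0, silence is clipped to the noise floor
print(clippedDecibels(Int16.max))           // 0.0, the zero reference
print(clippedDecibels(1, noiseFloor: -100)) // about -90.3
```

With the default noise floor of -70, the quietest nonzero sample (about -90 decibels) is clipped, so a lower noise floor is needed to distinguish it from silence.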

The app view includes a segmented control named Noise Floor to select different options for clipping decibels.

The PlotAudio Function section discusses how these decibel values are drawn into a SwiftUI view.

Decibel Example.

The function DecibelExample() in PlotAudioApp.swift confirms that the amplitude version of vDSP.convert uses the decibel formula for root-power values by comparing its results on a small array with a direct computation:

func DecibelExample() {

var bufferSamplesD:[Double] = [1.0, 3.0, 5.0]

vDSP.convert(amplitude: bufferSamplesD, toDecibels: &bufferSamplesD, zeroReference: 5.0)

print("vDSP.convert = \(bufferSamplesD)")

// = [-13.979400086720375, -4.436974992327126, 0.0]

// compare

print("20 * log10 = \([20 * log10(1.0/5.0), 20 * log10(3.0/5.0), 20 * log10(5.0/5.0)])")

// = [-13.979400086720375, -4.436974992327128, 0.0]

}

Downsampling

Downsampling will reduce the large number of audio samples, as decibels, to a subset of a desired size that can fit in the width of the plot view.

The desired size is determined by the width and spacing of the bars of the plot, as well as the width of the view. Each element of the subset is an anti-aliasing average of the samples to smooth the result, where the number of samples averaged is the ratio of original size to the desired size, with the remainder ignored. This ratio is called the decimation factor.

Downsampling can be a memory intensive task because of the large number of audio samples. Two contrasting methods for downsampling are implemented to illustrate how memory can be reduced.

The first method requires the most memory and downsamples the whole array of audio samples at once. The second method is progressive, and processes blocks of much smaller sample count in succession, as data is read, to reduce memory. As samples are read from the file they are accumulated into a block until its size exceeds a threshold. The threshold is determined by the sample rate of the audio and the number of seconds of audio to process per block.

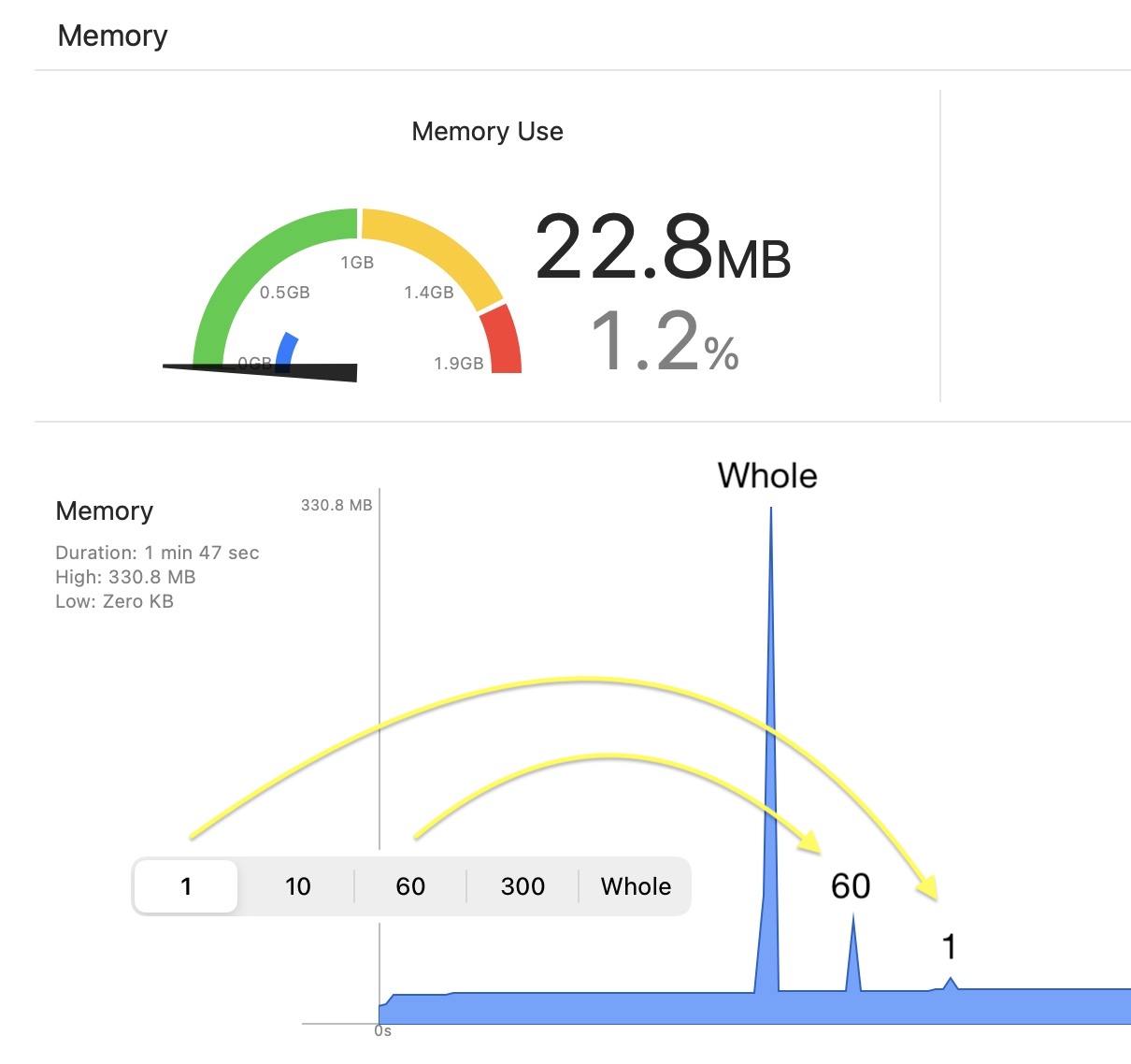

The control view includes a segmented control named Downsample Rate to choose the whole or progressive approach by picking the option Whole or the number of seconds to process per block. Memory required for the progressive method is uniformly bounded, while the memory required by the whole method is directly proportional to the duration of the audio.

Inspect the memory view of Xcode while the app processes audio to see how it is affected by the choices.
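A back-of-the-envelope estimate shows the scale of the difference. The helper below is hypothetical and counts only a mono Int16 sample array plus its Double conversion; actual memory use also includes buffers owned by AVFoundation and other allocations.

```swift
// Rough estimate only: bytes needed to hold `seconds` of mono Int16 samples
// and their Double conversions at `sampleRate` samples per second.
func estimatedBytes(seconds: Int, sampleRate: Int = 44_100) -> Int {
    let sampleCount = seconds * sampleRate
    return sampleCount * (MemoryLayout<Int16>.size + MemoryLayout<Double>.size)
}

// Whole method: one hour of audio is held at once.
print(estimatedBytes(seconds: 3600)) // 1587600000 bytes, about 1.6 GB
// Progressive method: about 10 seconds of audio per block.
print(estimatedBytes(seconds: 10))   // 4410000 bytes, about 4.4 MB
```

The whole-method estimate grows with the duration of the audio, while the per-block estimate depends only on the number of seconds chosen per block.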

The pseudocode for vDSP_desamp is:

for (n = 0; n < N; ++n)

{

sum = 0;

for (p = 0; p < P; ++p)

sum += A[n * P + p] * F[p];

C[n] = sum;

}

Where:

A - array to be downsampled

C - array to hold downsamples

N - desired size of the downsampled array

P - decimation factor, which is also the length of the averaging filter F

F - filter array
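For checking results by hand, the strided loop can be written in plain Swift, with output n starting at sample n × decimation factor. This is a reference sketch with a hypothetical function name, not a replacement for vDSP_desampD.

```swift
// Plain Swift sketch of a strided filter loop; the function name is hypothetical.
// Requires (count - 1) * decimationFactor + filter.count <= a.count.
func referenceDesample(_ a: [Double], decimationFactor: Int, filter f: [Double], count n: Int) -> [Double] {
    var c = [Double](repeating: 0, count: n)
    for i in 0..<n {
        var sum = 0.0
        for p in 0..<f.count {
            // Each output starts decimationFactor samples after the previous one.
            sum += a[i * decimationFactor + p] * f[p]
        }
        c[i] = sum
    }
    return c
}

let samples: [Double] = [3, 2, 1, 2, 2, 1, 7, 1, 5, 1, 4]
let factor = samples.count / 2 // 5
let averaging = [Double](repeating: 1.0 / Double(factor), count: factor)
print(referenceDesample(samples, decimationFactor: factor, filter: averaging, count: 2))
// approximately [2.0, 3.0]
```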

The desired size of the downsampled array N is given by a function of the bar width and spacing, and the view size width:

func downsampleCount() -> Int {

return Int(self.frameSize.width / Double((self.barSpacing + self.barWidth)))

}

The decimation factor P is determined by the audio’s sample count, totalSampleCount, and the desired size:

let decimationFactor = totalSampleCount / downsampleCount()

The totalSampleCount can be determined exactly using AVAssetReader to read and count the audio samples, or approximated from the audio sample rate and duration.

Finally the filter F is an anti-aliasing average over decimationFactor samples:

let filter = [Double](repeating: 1.0 / Double(decimationFactor), count:decimationFactor)

The downsample process then steps through the array of audio samples by decimationFactor step sizes, forming the average of next decimationFactor elements.
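To get a feel for the magnitudes involved, the quantities above can be computed for a hypothetical case: a 600 point wide view, bars 2 points wide with 1 point spacing, and three minutes of audio at 44,100 samples per second. All values and variable names below are assumptions chosen for illustration, and the sample count is approximated from duration and sample rate.

```swift
// Hypothetical values for illustration; none are taken from the app.
let viewWidth = 600.0
let barWidth = 2.0
let barSpacing = 1.0
let durationSeconds = 180.0
let sampleRate = 44_100.0

// Desired number of bars that fit across the view.
let desiredCount = Int(viewWidth / (barWidth + barSpacing))    // 200
// Approximate sample count from duration and sample rate.
let approximateSampleCount = Int(durationSeconds * sampleRate) // 7938000
// Integer division: the remainder is ignored.
let decimation = approximateSampleCount / desiredCount         // 39690
// Averaging filter: `decimation` equal weights that sum to 1.
let weights = [Double](repeating: 1.0 / Double(decimation), count: decimation)

print(desiredCount, approximateSampleCount, decimation, weights.count)
```

Each bar in this case summarizes almost 40,000 samples, which is why an averaging filter gives a smoother result than picking single samples.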

For progressive downsampling, each block is downsampled with leftovers set aside for the next block. A block may have unprocessed leftovers since in general the length of the current block is not exactly divisible by the decimationFactor, but has a remainder. The leftovers, saved in an array named end, are added as a prefix to the next block.

Downsample Example.

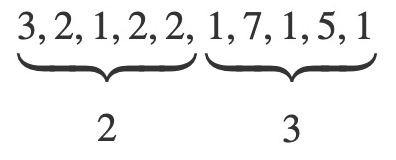

For example, if there are 11 samples and the desired size is 2, then every 5 samples are averaged, since 11⁄2 = 5 with a remainder of 1.

Specifically, the array [3,2,1,2,2,1,7,1,5,1,4] is downsampled to [2,3], since (3+2+1+2+2)/5 = 2 and (1+7+1+5+1)/5 = 3.

The last element, 4, is dropped because there are not enough samples left to form another average.

The following code, which can be run in an Xcode playground, uses vDSP_desampD to verify the result:

import Accelerate

let audioSamplesD:[Double] = [3,2,1,2,2,1,7,1,5,1,4]

let desiredSize = 2

let decimationFactor:Int = audioSamplesD.count / desiredSize

let filter = [Double](repeating: 1.0 / Double(decimationFactor), count:decimationFactor)

print("filter = \(filter)") // [0.2, 0.2, 0.2, 0.2, 0.2]

var downsamples = [Double](repeating: 0.0, count:desiredSize)

vDSP_desampD(audioSamplesD, vDSP_Stride(decimationFactor), filter, &downsamples, vDSP_Length(desiredSize), vDSP_Length(decimationFactor))

print(downsamples) // [2.0, 3.0]

Progressive Downsample Example.

The function DecimationExample() in PlotAudioApp.swift demonstrates the progressive method of downsampling on a small array. In the init() method of PlotAudioApp run it to downsample [3,2,1,2,2,1,7] to a size of 2 elements.

DecimationExample(audioSamples:[3,2,1,2,2,1,7], desiredSize:2)

A function downsample is defined that downsamples an array of Int16 using the input decimationFactor, but only if the decimationFactor does not exceed the size of the array. This function mimics the actual function by the same name used in PlotAudio.swift, but without converting to decibels.

func downsample(audioSamples:[Int16], decimationFactor:Int) -> [Double]? {

guard decimationFactor <= audioSamples.count else {

print("downsample, error : decimationFactor \(decimationFactor) > audioSamples.count \(audioSamples.count)")

return nil

}

print("downsample, array: \(audioSamples)")

var audioSamplesD = [Double](repeating: 0, count: audioSamples.count)

vDSP.convertElements(of: audioSamples, to: &audioSamplesD)

let filter = [Double](repeating: 1.0 / Double(decimationFactor), count:decimationFactor)

let downsamplesLength = audioSamplesD.count / decimationFactor

var downsamples = [Double](repeating: 0.0, count:downsamplesLength)

vDSP_desampD(audioSamplesD, vDSP_Stride(decimationFactor), filter, &downsamples, vDSP_Length(downsamplesLength), vDSP_Length(decimationFactor))

print("downsample, result: \(downsamples)")

return downsamples

}

Rather than reading blocks of audio samples from a file using AVAssetReader, as in the PlotAudio method readAndDownsampleAudioSamples, this example iterates an array, one element at a time.

In readAndDownsampleAudioSamples, samples are read as a CMSampleBuffer with copyNextSampleBuffer, which can contain thousands of audio samples, and appended to a block until the block size exceeds a threshold. For audio the block threshold is calculated as:

let audioSampleSizeThreshold = Int(audioSampleRate) * downsampleRateSeconds

The audioSampleRate is a property of the audio data and has a typical value of 44,100. The downsampleRateSeconds is a value of your choice, and set by the Downsample Rate control in this application.

The blocks in this example are accumulated element by element of the input array until the hardcoded value blockSizeThreshold is exceeded. Then the block is decimated with downsample, with unused elements in the remainder saved for the next iteration. The downsamples are accumulated into an array named downsamples_blocks, to be printed at the end.

The console output contrasts the whole and progressive methods, and prints out each of the blocks and corresponding remainders, if any, that are used in the next iteration.

The method begins by computing the decimation factor, Int(7⁄2) = 3, and printing it along with the input array:

let decimationFactor = audioSamples.count / desiredSize

audioSamples [3, 2, 1, 2, 2, 1, 7]

audioSamples.count = 7

desiredSize = 2

decimationFactor = 3

First the whole array is downsampled to [1.9999999999999998, 1.6666666666666665] with downsample:

var downsamples_whole:[Double] = []

// downsample whole at once

if let downsamples = downsample(audioSamples: audioSamples, decimationFactor: decimationFactor) {

downsamples_whole.append(contentsOf: downsamples)

}

Result:

WHOLE:

downsample, array: [3, 2, 1, 2, 2, 1, 7]

decimationFactor 3

downsample, result: [1.9999999999999998, 1.6666666666666665]

Next the progressive approach is used, and as blocks are downsampled the results are appended to the array downsamples_blocks.

Read samples one at a time from the input array into a block until the threshold blockSizeThreshold, hardcoded to 3, is exceeded:

var downsamples_blocks:[Double] = []

var block:[Int16] = []

let blockSizeThreshold = 3

var blockCount:Int = 0

// read a sample at a time into a block

for sample in audioSamples {

block.append(sample)

// process block when threshold exceeded

if block.count > blockSizeThreshold {

blockCount += 1

print("block \(blockCount) = \(block)")

// downsample block

if let blockDownsampled = downsample(audioSamples: block, decimationFactor: decimationFactor) {

downsamples_blocks.append(contentsOf: blockDownsampled)

}

// calculate number of leftover samples not processed in decimation

let remainder = block.count.quotientAndRemainder(dividingBy: decimationFactor).remainder

// save leftover

var end:[Int16] = []

if block.count-1 >= block.count-remainder {

end = Array(block[block.count-remainder...block.count-1])

}

print("end = \(end)\n")

block.removeAll()

// restore leftover

block.append(contentsOf: end)

}

}

// process anything left

if block.count > 0 {

print("block (final) = \(block)")

if let blockDownsampled = downsample(audioSamples: block, decimationFactor: decimationFactor) {

downsamples_blocks.append(contentsOf: blockDownsampled)

print("final block downsamples = \(blockDownsampled)\n")

}

}

print("downsamples:\(downsamples_whole) (whole)")

print("downsamples:\(downsamples_blocks) (blocks)")

Downsample block 1, with result [1.9999999999999998].

First block result:

PROGRESSIVE:

block 1 = [3, 2, 1, 2]

downsample, array: [3, 2, 1, 2]

decimationFactor 3

downsample, result: [1.9999999999999998]

end = [2]

The downsample result, [1.9999999999999998], is appended to downsamples_blocks.

Since the decimation factor is 3 there was a remainder of 1 element, [2]. This remaining element is prefixed to the next block 2. Samples continue to be read from the input until the threshold is exceeded again, and block 2 is downsampled, with result [1.6666666666666665]:

block 2 = [2, 2, 1, 7]

downsample, array: [2, 2, 1, 7]

decimationFactor 3

downsample, result: [1.6666666666666665]

end = [7]

The downsample result, [1.6666666666666665], is appended to downsamples_blocks, which is now [1.9999999999999998, 1.6666666666666665].

Again there is a remainder of one element, [7], but all samples have been read, so the final block is only [7]. Since that is fewer samples than the decimation factor, downsample reports an error for the final block. The progressive process is complete with the same final result [1.9999999999999998, 1.6666666666666665]:

block (final) = [7]

downsample, error : decimationFactor 3 > audioSamples.count 1

downsamples:[1.9999999999999998, 1.6666666666666665] (whole)

downsamples:[1.9999999999999998, 1.6666666666666665] (blocks)
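The leftover bookkeeping can also be written more compactly with suffix. The sketch below is a plain Swift illustration with hypothetical function names; it sums and then divides instead of multiplying by filter weights, so its last digits can differ slightly from the vDSP_desampD output shown above.

```swift
// Averages consecutive groups of `factor` samples; leftovers are ignored.
func averages(_ samples: [Double], factor: Int) -> [Double] {
    let count = samples.count / factor
    return (0..<count).map { i in
        samples[(i * factor)..<((i + 1) * factor)].reduce(0, +) / Double(factor)
    }
}

// Progressive version: accumulate a block, average it, carry the leftovers.
func progressiveAverages(_ samples: [Double], factor: Int, blockSizeThreshold: Int) -> [Double] {
    var result: [Double] = []
    var block: [Double] = []
    for sample in samples {
        block.append(sample)
        if block.count > blockSizeThreshold {
            result += averages(block, factor: factor)
            // suffix keeps the block.count % factor samples that were not averaged.
            block = Array(block.suffix(block.count % factor))
        }
    }
    // Final block; yields nothing if fewer than `factor` samples remain.
    result += averages(block, factor: factor)
    return result
}

let input: [Double] = [3, 2, 1, 2, 2, 1, 7]
print(averages(input, factor: 3))
print(progressiveAverages(input, factor: 3, blockSizeThreshold: 3))
// both print [2.0, 1.6666666666666667]
```

Because the leftovers are carried into the next block, every group of samples averaged by the progressive version is also a group in the whole version, which is why the two results agree.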

PlotAudio Classes

The subfolder PlotAudio contains the core files that implement preparing and plotting audio data in a view:

- DownsampleAudio : The AVFoundation, Accelerate and SwiftUI code that processes audio samples for plotting as a Path with the PlotAudio Function.

- PlotAudioWaveformView : The View that draws the plot of the processed audio samples.

- PlotAudioObservable : The ObservedObject of PlotAudioWaveformView whose properties control the appearance of the audio plot, and which calls methods of its PlotAudioDelegate.

The PlotAudio folder can be added to other projects to display audio plots of a media URL or AVAsset. The Xcode preview of PlotAudioWaveformView is set up to display the audio plots of three included audio files.

DownsampleAudio

The AVFoundation, Accelerate and SwiftUI code that processes audio samples for plotting as a Path with the PlotAudio Function.

This class has two properties, provided in the init:

- noiseFloor: Decibels are clipped to this value. Defaults to -70.

- antialias: Apply an averaging filter. Defaults to true.

After creating an instance of the class, generate the audio samples by calling the run method, passing the following arguments:

- asset: The AVAsset can be audio or video content. For example it can be a URL based asset, or a derivative asset that is an AVMutableComposition to process a range of an asset.

- downsampleCount: The desired number of audio samples to plot. This value will be determined by the width of the plot view in conjunction with the desired line width and spacing of the Path line elements.

- downsampleRateSeconds: The rate to process the audio progressively in blocks to limit the amount of memory required.

An instance of this class is used by the PlotAudioObservable method downsampleAudioToPlot for extracting samples from an AVAsset:

func downsampleAudioToPlot(asset:AVAsset, downsampleRateSeconds:Int?) {

isPlotting = true

audioDuration = asset.duration.seconds

downsampleAudio = DownsampleAudio(noiseFloor: Double(self.noiseFloor), antialias: pathAntialias)

downsampleAudio?.run(asset: asset, downsampleCount: self.downsampleCount(), downsampleRateSeconds: downsampleRateSeconds) { audioSamples in

DispatchQueue.main.async { [weak self] in

self?.audioSamples = audioSamples

self?.isPlotting = false

self?.plotAudioDelegate?.plotAudioDidFinishPlotting()

}

}

}

In the completion handler, plotAudioDidFinishPlotting notifies the PlotAudioDelegate that the downsamples have been prepared.

The function PlotAudio maps the decibel values into a frame whose size is frameSize:

func PlotAudio(audioSamples:[Double], frameSize:CGSize, noiseFloor:Double) -> Path?

The associated function PlotAudio creates a Path for audio samples that have been extracted, decibel converted and downsampled from an audio or video AVAsset using the DownsampleAudio class.

The PlotAudioObservable method updatePaths calls the function PlotAudio when an update is required to the plot, such as when parameters of the plot are changed by the controls:

func updatePaths() {

if let audioSamples = self.audioSamples {

if let audioPath = PlotAudio(audioSamples: audioSamples, frameSize: frameSize, noiseFloor: Double(self.noiseFloor)) {

self.audioPath = audioPath

}

}

}

Memory Usage

The array of audio samples can be very large requiring a lot of memory. Instead of processing the whole array at once it can be processed as a sequence of blocks of smaller size. As audio is read the samples can be appended to a block until its size exceeds a certain threshold, named audioSampleSizeThreshold, and then downsampled.

Since audio has an associated sample rate audioSampleRate, that specifies how many samples there are per second of audio, it makes sense to specify the threshold as a function of time in seconds, downsampleRateSeconds:

let audioSampleSizeThreshold = Int(audioSampleRate) * downsampleRateSeconds

A control is provided for varying the value of downsampleRateSeconds in order to compare the different outcomes in the memory view of Xcode.

Moreover, the run method is performed on a serial queue downsampleAudioQueue with a semaphore named running to ensure only one runs at a time:

let downsampleAudioQueue: DispatchQueue = DispatchQueue(label: "com.limit-point.downsampleAudioQueue", autoreleaseFrequency: DispatchQueue.AutoreleaseFrequency.workItem)

func run(asset:AVAsset, downsampleCount:Int, downsampleRateSeconds:Int?, completion: @escaping ([Double]?) -> ()) {

downsampleAudioQueue.async { [weak self] in

guard let self = self else {

return

}

if let downsampleRateSeconds = downsampleRateSeconds {

self.readAndDownsampleAudioSamples(asset: asset, downsampleCount: downsampleCount, downsampleRateSeconds: downsampleRateSeconds) { audioSamples in

print("audio downsampled progressively")

self.running.signal()

completion(audioSamples)

}

}

else {

self.readAndDownsampleAudioSamples(asset: asset, downsampleCount: downsampleCount) { audioSamples in

print("audio downsampled")

self.running.signal()

completion(audioSamples)

}

}

self.running.wait()

}

}

Read Audio

The method readAndDownsampleAudioSamples has two versions. One version processes the whole array of audio samples at once, and the other implements a progressive method using a sequence of smaller blocks.

Consider the version of readAndDownsampleAudioSamples that processes in blocks:

func readAndDownsampleAudioSamples(asset:AVAsset, downsampleCount:Int, downsampleRateSeconds:Int, completion: @escaping ([Double]?) -> ()) {

let (_, reader, readerOutput) = self.audioReader(asset:asset, outputSettings: kPAAudioReaderSettings)

guard let audioReader = reader,

let audioReaderOutput = readerOutput

else {

return completion(nil)

}

if audioReader.canAdd(audioReaderOutput) {

audioReader.add(audioReaderOutput)

}

else {

return completion(nil)

}

guard let sampleBuffer = asset.audioSampleBuffer(outputSettings:kPAAudioReaderSettings),

let sampleBufferSourceFormat = CMSampleBufferGetFormatDescription(sampleBuffer),

let audioStreamBasicDescription = sampleBufferSourceFormat.audioStreamBasicDescription else {

return completion(nil)

}

let totalSampleCount = asset.audioBufferAndSampleCounts(kPAAudioReaderSettings).sampleCount

let audioSampleRate = audioStreamBasicDescription.mSampleRate

guard downsampleCount <= totalSampleCount else {

return completion(nil)

}

let decimationFactor = totalSampleCount / downsampleCount

var downsamples:[Double] = []

var audioSamples:[Int16] = []

let audioSampleSizeThreshold = Int(audioSampleRate) * downsampleRateSeconds

if audioReader.startReading() {

while audioReader.status == .reading {

autoreleasepool { [weak self] in

if let sampleBuffer = audioReaderOutput.copyNextSampleBuffer(), let bufferSamples = self?.extractSamples(sampleBuffer) {

audioSamples.append(contentsOf: bufferSamples)

if audioSamples.count > audioSampleSizeThreshold {

if let audioSamplesDownsamples = downsample(audioSamples, decimationFactor: decimationFactor) {

downsamples.append(contentsOf: audioSamplesDownsamples)

}

// calculate number of leftover samples not processed in decimation

let remainder = audioSamples.count.quotientAndRemainder(dividingBy: decimationFactor).remainder

// save leftover

var end:[Int16] = []

if audioSamples.count-1 >= audioSamples.count-remainder {

end = Array(audioSamples[audioSamples.count-remainder...audioSamples.count-1])

}

audioSamples.removeAll()

// restore leftover

audioSamples.append(contentsOf: end)

}

}

else {

audioReader.cancelReading()

}

}

}

if audioSamples.count > 0 {

if let audioSamplesDownsamples = downsample(audioSamples, decimationFactor: decimationFactor) {

downsamples.append(contentsOf: audioSamplesDownsamples)

}

}

}

completion(downsamples)

}

First the method sets up the AVAssetReader:

let (_, reader, readerOutput) = self.audioReader(asset:asset, outputSettings: kPAAudioReaderSettings)

guard let audioReader = reader,

let audioReaderOutput = readerOutput

else {

return completion(nil)

}

if audioReader.canAdd(audioReaderOutput) {

audioReader.add(audioReaderOutput)

}

else {

return completion(nil)

}

With the function audioReader defined as:

func audioReader(asset:AVAsset, outputSettings: [String : Any]?) -> (audioTrack:AVAssetTrack?, audioReader:AVAssetReader?, audioReaderOutput:AVAssetReaderTrackOutput?) {

if let audioTrack = asset.tracks(withMediaType: .audio).first {

if let audioReader = try? AVAssetReader(asset: asset) {

let audioReaderOutput = AVAssetReaderTrackOutput(track: audioTrack, outputSettings: outputSettings)

return (audioTrack, audioReader, audioReaderOutput)

}

}

return (nil, nil, nil)

}

The value of kPAAudioReaderSettings of the outputSettings passed to AVAssetReaderTrackOutput is:

let kPAAudioReaderSettings = [

AVFormatIDKey: Int(kAudioFormatLinearPCM) as AnyObject,

AVLinearPCMBitDepthKey: 16 as AnyObject,

AVLinearPCMIsBigEndianKey: false as AnyObject,

AVLinearPCMIsFloatKey: false as AnyObject,

AVNumberOfChannelsKey: 1 as AnyObject,

AVLinearPCMIsNonInterleaved: false as AnyObject]

The audio reader settings keys are requesting samples to be returned with the following noteworthy specifications, so values returned are Int16:

- Format as ‘Linear PCM’, i.e. uncompressed samples (AVFormatIDKey)

- 16 bit integers, Int16 (AVLinearPCMBitDepthKey)

By including the key AVNumberOfChannelsKey set to 1 the audio reader will read all channels merged into one. By not including the key all channels would be returned interleaved, and each channel could then be processed separately. See Scaling Audio Files, where channels are processed separately to scale audio while preserving channel layout.
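If channels were read interleaved instead, separating them is a matter of striding through the sample array. The following is a minimal sketch with a hypothetical helper name, assuming samples are ordered frame by frame (for stereo: left, right, left, right, and so on); it is not code from the project.

```swift
// Hypothetical helper: splits interleaved samples [L0, R0, L1, R1, ...]
// into one array per channel. Trailing samples of an incomplete frame are dropped.
func deinterleave(_ samples: [Int16], channelCount: Int) -> [[Int16]] {
    guard channelCount > 0 else { return [] }
    let frameCount = samples.count / channelCount
    var channels = [[Int16]](repeating: [], count: channelCount)
    for channel in 0..<channelCount {
        channels[channel].reserveCapacity(frameCount)
        for frame in 0..<frameCount {
            channels[channel].append(samples[frame * channelCount + channel])
        }
    }
    return channels
}

let stereo: [Int16] = [10, -10, 20, -20, 30, -30]
print(deinterleave(stereo, channelCount: 2)) // [[10, 20, 30], [-10, -20, -30]]
```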

A CMSampleBuffer is read to determine the audioSampleRate for the block threshold audioSampleSizeThreshold. Then the total number of audio samples is counted for totalSampleCount to determine the decimationFactor for decimating the audio samples with Accelerate method vDSP_desampD:

guard let sampleBuffer = asset.audioSampleBuffer(outputSettings:kPAAudioReaderSettings),

let sampleBufferSourceFormat = CMSampleBufferGetFormatDescription(sampleBuffer),

let audioStreamBasicDescription = sampleBufferSourceFormat.audioStreamBasicDescription else {

return completion(nil)

}

let totalSampleCount = asset.audioBufferAndSampleCounts(kPAAudioReaderSettings).sampleCount

let audioSampleRate = audioStreamBasicDescription.mSampleRate

guard downsampleCount <= totalSampleCount else {

return completion(nil)

}

let decimationFactor = totalSampleCount / downsampleCount

var downsamples:[Double] = []

var audioSamples:[Int16] = []

let audioSampleSizeThreshold = Int(audioSampleRate) * downsampleRateSeconds

The audioSampleSizeThreshold is used to manage progressive processing:

if let sampleBuffer = audioReaderOutput.copyNextSampleBuffer(), let bufferSamples = self.extractSamples(sampleBuffer) {

audioSamples.append(contentsOf: bufferSamples)

if audioSamples.count > audioSampleSizeThreshold {

if let audioSamplesDownsamples = downsample(audioSamples, decimationFactor: decimationFactor) {

downsamples.append(contentsOf: audioSamplesDownsamples)

}

// calculate number of leftover samples not processed in decimation

let remainder = audioSamples.count.quotientAndRemainder(dividingBy: decimationFactor).remainder

// save leftover

var end:[Int16] = []

if audioSamples.count-1 >= audioSamples.count-remainder {

end = Array(audioSamples[audioSamples.count-remainder...audioSamples.count-1])

}

audioSamples.removeAll()

// restore leftover

audioSamples.append(contentsOf: end)

}

}

else {

audioReader.cancelReading()

}

As sample buffers are read with copyNextSampleBuffer and samples extracted, they are accumulated into the array audioSamples until the threshold is exceeded:

if let sampleBuffer = audioReaderOutput.copyNextSampleBuffer(), let bufferSamples = self.extractSamples(sampleBuffer) {

audioSamples.append(contentsOf: bufferSamples)

if audioSamples.count > audioSampleSizeThreshold {

...

}

}

Once the threshold is exceeded, the accumulated samples are downsampled with downsample, discussed in the next section, Process Audio:

if let audioSamplesDownsamples = downsample(audioSamples, decimationFactor: decimationFactor) {

downsamples.append(contentsOf: audioSamplesDownsamples)

}

The processed samples are accumulated in the array downsamples using append.

The number of samples in audioSamples actually processed depends on the decimationFactor, according to the algorithm in the pseudocode at the description of the Accelerate method vDSP_desampD. Only if audioSamples.count is divisible by the decimationFactor will all samples be processed. The unprocessed samples are saved in an array called end:

// calculate number of leftover samples not processed in decimation

let remainder = audioSamples.count.quotientAndRemainder(dividingBy: decimationFactor).remainder

// save leftover

var end:[Int16] = []

if audioSamples.count-1 >= audioSamples.count-remainder {

end = Array(audioSamples[audioSamples.count-remainder...audioSamples.count-1])

}

Then clear the array of samples and append the saved end:

audioSamples.removeAll()

// restore leftover

audioSamples.append(contentsOf: end)

Continue reading sample buffers and processing them in the manner described above until the end of the sample buffers is reached.

Finally process any remaining samples:

if audioSamples.count > 0 {

if let audioSamplesDownsamples = downsample(audioSamples, decimationFactor: decimationFactor) {

downsamples.append(contentsOf: audioSamplesDownsamples)

}

}

The array downsamples now contains all processed samples.

Process Audio

Preparation of the samples for plotting uses five Accelerate methods in the downsample method.

vDSP.convertElements

Since Accelerate methods work with Float or Double, and the audio samples are read as Int16, use vDSP.convertElements to convert the Int16 samples to Double:

var audioSamplesD = [Double](repeating: 0, count: audioSamples.count)

vDSP.convertElements(of: audioSamples, to: &audioSamplesD)

vDSP.absolute

Decibels are computed using a ratio of positive power values, or voltages, related by the power-voltage relation P = V²/R. But audio samples are signed (+,-) voltage quantities, so the absolute value is taken with vDSP.absolute:

vDSP.absolute(audioSamplesD, result: &audioSamplesD)

vDSP.convert

vDSP.convert converts the voltage samples to decibels with Int16.max as the zero reference (the decibel value of the zero reference is 0):

vDSP.convert(amplitude: audioSamplesD, toDecibels: &audioSamplesD, zeroReference: Double(Int16.max))

The amplitude rather than power form of vDSP.convert is used since audio sample values are voltage amplitudes, not power. Since power is proportional to the square of voltage, voltage is said to be a root-power value. See the decibel example.

The maximum audio value, Vref, is 0 decibels, since 20 log10(Vref / Vref) = 20 log10(1) = 0.

vDSP.clip

Decibel values are in the range [-∞, 0] since the ratio of each absolute sample value to the zero reference is in the range [0,1]. Avoid -∞ in the values by clipping them to a low value specified by the noiseFloor property.

Clip the values to the range noiseFloor...0 with vDSP.clip:

audioSamplesD = vDSP.clip(audioSamplesD, to: noiseFloor...0)

All audio decibel values are now non-positive values in the range noiseFloor…0. This is important to note for the function PlotAudio that will linearly map these values into the rectangle of the view.
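To check individual values by hand, the absolute value, decibel conversion and clipping steps can be written for a single sample in plain Swift. This is a sketch of the arithmetic only, not the Accelerate implementation: the helper name decibels(for:noiseFloor:) is hypothetical, and the noise floor of -70 used in the usage lines is an assumed value.

```swift
import Foundation

// Hypothetical scalar equivalent of absolute value, amplitude-to-decibel
// conversion and clipping for one Int16 sample.
func decibels(for sample: Int16, noiseFloor: Double) -> Double {
    // Convert to Double before taking the absolute value:
    // abs(Int16.min) would overflow in Int16 arithmetic.
    let amplitude = abs(Double(sample))
    let zeroReference = Double(Int16.max)
    // Amplitude (root-power) form: 20 * log10(ratio); -infinity for amplitude 0.
    let value = 20 * log10(amplitude / zeroReference)
    // Clip to noiseFloor...0.
    return min(max(value, noiseFloor), 0)
}

let loudest = decibels(for: Int16.max, noiseFloor: -70) // 0.0
let silent = decibels(for: 0, noiseFloor: -70)          // -70.0 (clipped from -infinity)
let tenth = decibels(for: 3277, noiseFloor: -70)        // close to -20
```

A sample at about one tenth of the reference amplitude is close to -20 decibels, which is the factor 20 of the amplitude form at work.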

vDSP_desampD

Downsample with vDSP_desampD using the decimationFactor that is computed as the ratio of total audio sample count to the desired sample count. See the downsample example.

var filter = [Double](repeating: 1.0 / Double(decimationFactor), count:decimationFactor)

if antialias == false {

filter = [1.0]

}

let downsamplesLength = Int(audioSamplesD.count / decimationFactor)

var downsamples = [Double](repeating: 0.0, count:downsamplesLength)

vDSP_desampD(audioSamplesD, vDSP_Stride(decimationFactor), filter, &downsamples, vDSP_Length(downsamplesLength), vDSP_Length(filter.count))

The filter averages decimationFactor samples if the antialias property is true.
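The effect of the two filter choices can be seen with a plain Swift version of the sum that the vDSP_desampD pseudocode describes, C[n] = Σ A[n·DF + p]·F[p]. This is an illustrative sketch, not a replacement for the Accelerate call. The function name desample is hypothetical, and a decimation factor of 3 is assumed for the sample array.

```swift
// Hypothetical plain Swift version of the decimation sum:
// each output is a filtered sum starting at every decimationFactor-th input.
func desample(_ input: [Double], decimationFactor: Int, filter: [Double]) -> [Double] {
    precondition(decimationFactor > 0, "decimationFactor must be positive")
    // Keeps every read in bounds for the output count used below.
    precondition(filter.count <= decimationFactor, "filter longer than stride")
    let outputCount = input.count / decimationFactor
    return (0..<outputCount).map { n in
        var sum = 0.0
        for p in 0..<filter.count {
            sum += input[n * decimationFactor + p] * filter[p]
        }
        return sum
    }
}

let samples: [Double] = [3, 2, 1, 2, 2, 1, 7]
let factor = 3

// antialias == false: the filter [1.0] keeps the first sample of each block
let picked = desample(samples, decimationFactor: factor, filter: [1.0])
// picked is [3.0, 2.0]

// antialias == true: the filter averages each block of 3 samples
let averagingFilter = [Double](repeating: 1.0 / Double(factor), count: factor)
let averaged = desample(samples, decimationFactor: factor, filter: averagingFilter)
// averaged is approximately [2.0, 1.667]; the trailing 7 is left unprocessed
```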

PlotAudio Function

The processed audio samples audioSamples, which are downsampled decibel values of the original samples clipped to the range noiseFloor…0, are plotted with a Path by PlotAudio.

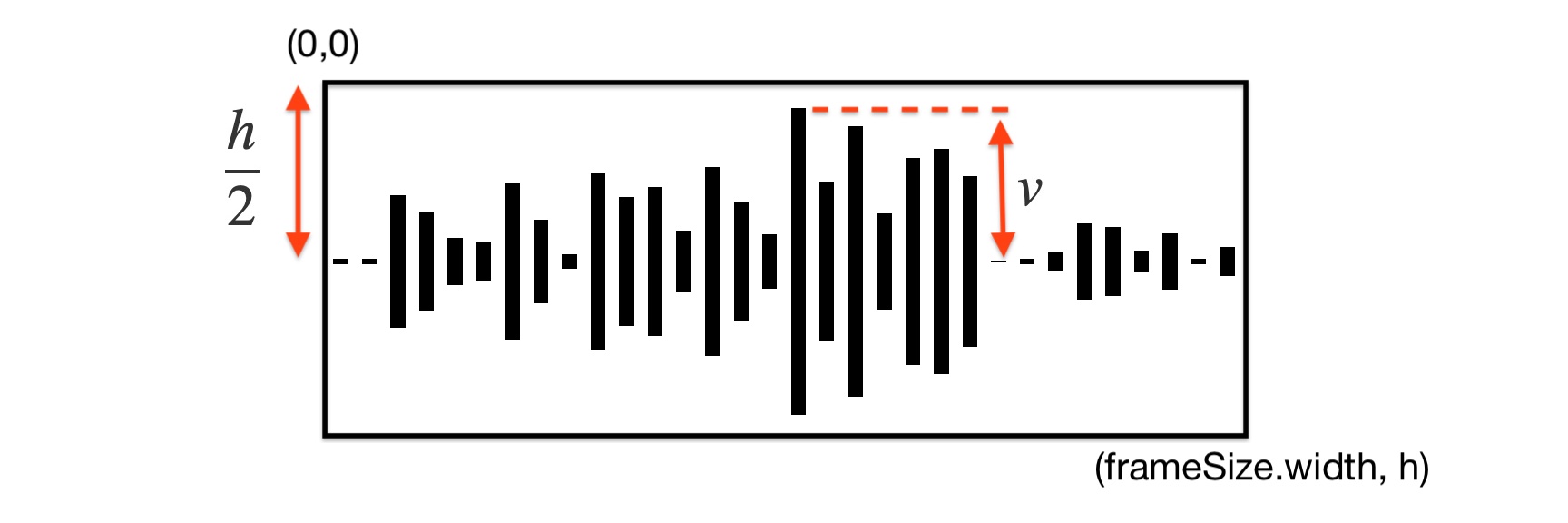

The decibel values in the array audioSamples are mapped into the view rectangle of height frameSize.height to produce a shape that is symmetric about the vertical center, where a value of noiseFloor has zero height and the height of each bar is proportional to its decibel value above noiseFloor.

The PlotAudioWaveformView has a frame of size frameSize, with a coordinate system where the top left corner is the origin:

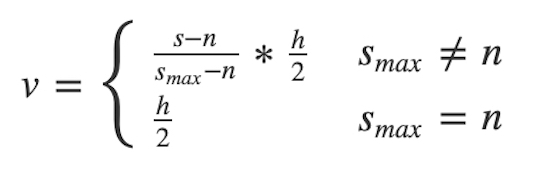

Define:

s = decibel sample value

h = view height

n = noise floor

smax = maximum sample value

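Then v, read here from the function that follows as the distance of each bar's endpoints from the vertical center h/2, is given by the expression:

v = (h/2) · (s − n) / (smax − n)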

The following function PlotAudio implements this formula to map processed audio samples into a Path for drawing in the PlotAudioWaveformView.

func PlotAudio(audioSamples:[Double], frameSize:CGSize, noiseFloor:Double) -> Path? {

let sampleCount = audioSamples.count

guard sampleCount > 0 else {

return nil

}

let audioSamplesMaximum = vDSP.maximum(audioSamples)

let midPoint = frameSize.height / 2

let deltaT = frameSize.width / Double(sampleCount)

let audioPath = Path { path in

for i in 0...sampleCount-1 {

var scaledSample = midPoint

if audioSamplesMaximum != noiseFloor {

scaledSample *= (audioSamples[i] - noiseFloor) / (audioSamplesMaximum - noiseFloor)

}

path.move(to: CGPoint(x: deltaT * Double(i), y: midPoint - scaledSample))

path.addLine(to: CGPoint(x: deltaT * Double(i), y: midPoint + scaledSample))

}

}

return audioPath

}
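The scaling can be checked for individual values without drawing anything. The sketch below repeats the arithmetic of PlotAudio for one sample in plain Swift. The helper name barExtent and the numbers in the usage lines are assumptions chosen for illustration.

```swift
// Hypothetical helper mirroring the scaling in PlotAudio for a single sample.
// Returns the y-coordinates of the top and bottom of the bar.
func barExtent(sample s: Double, height h: Double, noiseFloor n: Double, maximum sMax: Double) -> (top: Double, bottom: Double) {
    let midPoint = h / 2
    var v = midPoint
    // As in PlotAudio, skip the scaling when every sample equals noiseFloor,
    // which avoids a division by zero.
    if sMax != n {
        v *= (s - n) / (sMax - n)
    }
    return (top: midPoint - v, bottom: midPoint + v)
}

let full = barExtent(sample: -10, height: 100, noiseFloor: -70, maximum: -10)
// full is (top: 0.0, bottom: 100.0): the maximum sample spans the whole height
let half = barExtent(sample: -40, height: 100, noiseFloor: -70, maximum: -10)
// half is (top: 25.0, bottom: 75.0)
let floor = barExtent(sample: -70, height: 100, noiseFloor: -70, maximum: -10)
// floor is (top: 50.0, bottom: 50.0): a sample at noiseFloor has zero height
```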

PlotAudioWaveformView

The View that draws the plot of processed audio samples with a Path generated by the PlotAudio Function.

The PlotAudioWaveformView draws the Path prepared with DownsampleAudio and PlotAudio, and the indicator overlay for audio time, called indicatorPercent.

The indicatorPercent is the audio time as a fraction of the audio duration, from 0 to 1.

PlotAudioObservable is an ObservedObject of PlotAudioWaveformView, so the view redraws when its published properties change.

The indicator overlay is drawn according to the value of properties indicatorPercent and fillIndicator:

if plotAudioObservable.indicatorPercent > 0 {

if plotAudioObservable.fillIndicator {

Path { path in

path.addRect(CGRect(x: 0, y: 0, width: plotAudioObservable.indicatorPercent * plotAudioObservable.frameSize.width, height: plotAudioObservable.frameSize.height).insetBy(dx: 0, dy: -plotAudioObservable.lineWidth()/2))

}

.fill(paleRed)

}

else {

Path { path in

path.move(to: CGPoint(x: plotAudioObservable.indicatorPercent * plotAudioObservable.frameSize.width, y: -plotAudioObservable.lineWidth()/2))

path.addLine(to: CGPoint(x: plotAudioObservable.indicatorPercent * plotAudioObservable.frameSize.width, y: plotAudioObservable.frameSize.height + plotAudioObservable.lineWidth()/2))

}

.stroke(Color.red, lineWidth: 2)

}

}

A drag gesture is installed on PlotAudioWaveformView so that the indicatorPercent can be changed by dragging and tapping. When the drag changes or ends, PlotAudioObservable is notified by calling its methods handleDragChanged and handleDragEnded with the value of indicatorPercent:

.gesture(DragGesture(minimumDistance: 0)

.onChanged({ value in

let percent = min(max(0, value.location.x / plotAudioObservable.frameSize.width), 1)

plotAudioObservable.indicatorPercent = percent

plotAudioObservable.handleDragChanged(value:percent)

})

.onEnded({ value in

let percent = min(max(0, value.location.x / plotAudioObservable.frameSize.width), 1)

plotAudioObservable.indicatorPercent = percent

plotAudioObservable.handleDragEnded(value: percent)

})

)
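The clamping in both gesture handlers keeps the value in 0...1 even when the drag location leaves the view. A minimal sketch of that computation in plain Swift follows. The function name indicatorFraction is hypothetical, and the guard for a zero width is an added assumption that the handlers above do not include.

```swift
// Hypothetical helper: converts a drag x-location into a fraction of the width,
// clamped to 0...1 as in the gesture handlers.
func indicatorFraction(x: Double, width: Double) -> Double {
    // Assumption: treat an empty frame as 0 rather than dividing by zero.
    guard width > 0 else { return 0 }
    return min(max(0, x / width), 1)
}

let inside = indicatorFraction(x: 50, width: 200)   // 0.25
let left = indicatorFraction(x: -10, width: 200)    // 0.0, dragged past the left edge
let right = indicatorFraction(x: 300, width: 200)   // 1.0, dragged past the right edge
```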

PlotAudioObservable has associated protocol PlotAudioDelegate so its delegate plotAudioDelegate can respond to drag events:

protocol PlotAudioDelegate : AnyObject {

func plotAudioDragChanged(_ value: CGFloat)

func plotAudioDragEnded(_ value: CGFloat)

func plotAudioDidFinishPlotting()

}

PlotAudioDelegate is constrained to AnyObject because PlotAudioObservable holds its delegate in the weak reference plotAudioDelegate. Only reference types can be referenced weakly, and the weak reference prevents a retain cycle.

The PlotAudioObservable methods handleDragEnded and handleDragChanged notify the plotAudioDelegate with information about the drag, including the value of indicatorPercent:

func handleDragChanged(value:CGFloat) {

plotAudioDelegate?.plotAudioDragChanged(value)

}

func handleDragEnded(value:CGFloat) {

plotAudioDelegate?.plotAudioDragEnded(value)

}

In this app the ControlViewObservable is the PlotAudioDelegate. Conforming to PlotAudioDelegate enables ControlViewObservable to synchronize the audio and video player with the current indicator overlay time.
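The weak delegate pattern used here can be reduced to a few lines. The following sketch uses hypothetical names (DragDelegate, DragSource, Recorder) rather than the project's types. It shows that the source forwards values while the delegate is alive, and that the weak reference becomes nil once the delegate is released.

```swift
// Hypothetical protocol, constrained to AnyObject so it can be held weakly.
protocol DragDelegate: AnyObject {
    func dragChanged(_ value: Double)
}

// Hypothetical source of drag events, playing the role of PlotAudioObservable.
final class DragSource {
    weak var delegate: DragDelegate? // weak: the source does not keep its delegate alive

    func handleDragChanged(value: Double) {
        delegate?.dragChanged(value) // does nothing once the delegate is gone
    }
}

// Hypothetical delegate that records the last value it received.
final class Recorder: DragDelegate {
    private(set) var lastValue: Double?
    func dragChanged(_ value: Double) { lastValue = value }
}

func demo() -> (received: Double?, delegateIsNilAfterRelease: Bool) {
    let source = DragSource()
    var recorder: Recorder? = Recorder()
    source.delegate = recorder
    source.handleDragChanged(value: 0.25)
    let received = recorder?.lastValue
    recorder = nil // releases the only strong reference
    return (received, source.delegate == nil)
}
```

Calling demo() returns the forwarded value 0.25 and true for the second element, since nothing else retains the recorder.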

PlotAudioObservable

The ObservedObject of PlotAudioWaveformView, whose properties control the appearance of the audio plot and which calls methods of its PlotAudioDelegate.

PlotAudioObservable has a published property named indicatorPercent that specifies time as a fraction of the audio duration. PlotAudioWaveformView uses this value to draw the translucent indicator overlay.

In this app ControlViewObservable implements PlotAudioDelegate to synchronize the indicator overlay of the PlotAudioWaveformView with the audio and video player.

Dragging the indicator overlay can update the time of the players. Conversely, the indicator overlay can be updated as the time of audio or video players change.

Synchronize Plot to Players

PlotAudioObservable updates its delegate with the value of indicatorPercent when it is changed by dragging or tapping the PlotAudioWaveformView.

It does this by calling methods of PlotAudioDelegate when dragging changes or ends, passing the value of indicatorPercent.

ControlViewObservable sets itself as the delegate of PlotAudioObservable in its init:

init(plotAudioObservable:PlotAudioObservable, fileTableObservable: FileTableObservable) {

self.plotAudioObservable = plotAudioObservable

...

self.plotAudioObservable.plotAudioDelegate = self

ControlViewObservable then implements delegate methods plotAudioDragChanged and plotAudioDragEnded to update the audio and video player, synchronizing their times with indicatorPercent received as the function argument:

// PlotAudioDelegate

// set delegate in init!

func plotAudioDragChanged(_ value: CGFloat) {

switch mediaType {

case .audio:

if isPlaying {

audioPlayer.stopPlayingAudio()

}

isPlaying = false

isPaused = false

case .video:

videoPlayer.pause()

isPlaying = false

isPaused = false

videoPlayer.seek(to: CMTimeMakeWithSeconds(value * videoDuration, preferredTimescale: kPreferredTimeScale), toleranceBefore: CMTime.zero, toleranceAfter: CMTime.zero)

}

}

// PlotAudioDelegate

func plotAudioDragEnded(_ value: CGFloat) {

switch mediaType {

case .audio:

isPlaying = true

if let currentFile = fileTableObservable.currentFile {

let _ = audioPlayer.playAudioURL(currentFile.url, percent: value)

}

case .video:

isPlaying = true

videoPlayer.play()

}

}

// PlotAudioDelegate

func plotAudioDidFinishPlotting() {

self.playSelectedMedia()

}

Synchronize Video Player to Plot

Synchronizing the indicator overlay with the video player time is achieved with a periodic time observer and the seek method.

In ControlViewObservable a periodic time observer is added to the video player so that when the player time changes the indicatorPercent changes as well:

func updatePlayer(_ url:URL) {

let asset = AVAsset(url: url)

videoDuration = asset.duration.seconds

self.videoPlayerItem = AVPlayerItem(asset: asset)

self.videoPlayer.replaceCurrentItem(with: self.videoPlayerItem)

if let periodicTimeObserver = self.videoPlayerPeriodicTimeObserver {

self.videoPlayer.removeTimeObserver(periodicTimeObserver)

}

self.videoPlayerPeriodicTimeObserver = self.videoPlayer.addPeriodicTimeObserver(forInterval: CMTime(value: 1, timescale: 30), queue: nil) { [weak self] cmTime in

if let duration = self?.videoDuration {

self?.plotAudioObservable.indicatorPercent = cmTime.seconds / duration

}

}

}
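The observer divides the current time by the duration directly. Since the seconds property of a CMTime can be NaN for an invalid or indefinite time, a more defensive conversion could look like the sketch below. The helper name progressFraction is hypothetical and is not part of the project.

```swift
// Hypothetical helper: converts a playback time into a fraction of the duration,
// returning 0 for unusable inputs and clamping the result to 0...1.
func progressFraction(currentSeconds: Double, durationSeconds: Double) -> Double {
    guard currentSeconds.isFinite, durationSeconds.isFinite, durationSeconds > 0 else {
        return 0
    }
    return min(max(0, currentSeconds / durationSeconds), 1)
}

let midway = progressFraction(currentSeconds: 5, durationSeconds: 10)        // 0.5
let overrun = progressFraction(currentSeconds: 12, durationSeconds: 10)      // 1.0
let unknown = progressFraction(currentSeconds: 1, durationSeconds: .nan)     // 0.0
```

In the observer closure, such a helper would take cmTime.seconds and videoDuration as its arguments.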

Synchronize Audio Player To Plot

Synchronizing the indicator overlay with the AudioPlayer is achieved by ControlViewObservable conforming to the AudioPlayerDelegate protocol.

For lack of a periodic time observer, AudioPlayer uses a Timer to keep track of its AVAudioPlayer time, and the timer action calls audioPlayProgress to update its delegate.

Moreover, AudioPlayer is an AVAudioPlayerDelegate and implements audioPlayerDidFinishPlaying to be notified when the AVAudioPlayer has finished playing.

In this way the indicatorPercent can be updated by changes in the audio player time:

// AudioPlayerDelegate

// set delegate in init!

func audioPlayDone(_ player: AudioPlayer?) {

isPlaying = false

isPaused = false

plotAudioObservable.indicatorPercent = 0

}

// AudioPlayerDelegate

func audioPlayProgress(_ player: AudioPlayer?, percent: CGFloat) {

plotAudioObservable.indicatorPercent = percent

}

App Classes

The classes that build an app around the functionality of PlotAudio.

- PlotAudioApp : Creates the observable objects PlotAudioObservable, FileTableObservable and ControlViewObservable that manage the audio plot, file list, players and controls.

- PlotAudioAppView : The main view containing the plot, file list, players and controls.

- FileTableObservable : A manager of the file list that loads and switches between audio and video files.

- ControlViewObservable : Manages updating the plot view from changes to the controls, as well as the synchronization of the plot view with the players.

- AudioPlayer : A wrapper for AVAudioPlayer to provide audio play progress to a delegate, as well as play audio at a specific time.

PlotAudioApp

Creates the PlotAudioAppView and observable objects PlotAudioObservable, FileTableObservable and ControlViewObservable that manage the audio plot, file list, players and controls.

WindowGroup {

PlotAudioAppView(controlViewObservable: ControlViewObservable(plotAudioObservable: PlotAudioObservable(), fileTableObservable: FileTableObservable()))

}

The init also runs the two examples of this document, decibel example and downsample example:

DecimationExample(audioSamples:[3,2,1,2,2,1,7], desiredSize:2)

DecibelExample()

PlotAudioAppView

The main view containing the plot, file list, players and controls, with a StateObject that is a ControlViewObservable. Using a StateObject allows multiple windows to have their own state to play and plot different media files.

@StateObject var controlViewObservable: ControlViewObservable

Initial conditions for the plot’s media source and appearance are set using the onAppear view modifier.

.onAppear {

controlViewObservable.plotAudioObservable.pathGradient = true

controlViewObservable.plotAudioObservable.barWidth = 3

controlViewObservable.plotAudioObservable.barSpacing = 2

controlViewObservable.fileTableObservable.selectIndex(3)

}

Add and remove files in the Audio Files or Video Files project folders to modify the list of files.

This view displays a list of audio or video files based on the mediaType published property of FileTableObservable, whose type is MediaType:

enum MediaType: String, CaseIterable, Identifiable {

case audio, video

var id: Self { self }

}

A Picker handles media type changes:

Picker("Media Type", selection: $controlViewObservable.fileTableObservable.mediaType) {

ForEach(MediaType.allCases) { type in

Text(type.rawValue.capitalized)

}

}
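The labels that the Picker shows follow directly from the enum. This short sketch repeats the MediaType declaration so that it is self-contained and lists the labels produced by rawValue.capitalized. The constant name labels is introduced for illustration.

```swift
import Foundation // provides String.capitalized

enum MediaType: String, CaseIterable, Identifiable {
    case audio, video
    var id: Self { self }
}

// The raw value of each case defaults to the case name.
let labels = MediaType.allCases.map { $0.rawValue.capitalized }
// labels is ["Audio", "Video"]
```

Conforming to CaseIterable supplies allCases for the ForEach, and Identifiable lets ForEach use each case as its own identity.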

When an item is selected in the list the PlotAudioWaveformView is updated with the plot of the audio samples of that item.

File Selection

Changes to the selection in the list of files are handled by an onChange modifier for the property selectedFileID of the FileTableObservable. The change action sets the published property asset of the PlotAudioObservable:

FileTableView(fileTableObservable: controlViewObservable.fileTableObservable)

.onChange(of: controlViewObservable.fileTableObservable.selectedFileID) { newSelectedFileID in

if newSelectedFileID != nil, let index = controlViewObservable.fileTableObservable.files.firstIndex(where: {$0.id == newSelectedFileID}) {

let fileURL = controlViewObservable.fileTableObservable.files[index].url

controlViewObservable.plotAudioObservable.asset = AVAsset(url: fileURL)

}

}

The change to the asset in PlotAudioObservable is received by a sink that calls the method plotAudio, which updates the plot in PlotAudioWaveformView:

$asset.sink { [weak self] _ in

DispatchQueue.main.async {

self?.plotAudio()

}

}.store(in: &cancelBag)

[weak self] captures self weakly in the closure, which prevents a retain cycle between the object and the subscription it stores in cancelBag.
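One possible reason the sink defers its work with DispatchQueue.main.async, offered here as an assumption about intent rather than something the project states, is that a @Published property publishes from willSet. Inside the sink the property still holds its previous value, so a method that reads the property needs to run after the assignment completes. The sketch below uses a hypothetical Model class to show this ordering. It is wrapped in a canImport check because Combine is only available on Apple platforms.

```swift
#if canImport(Combine)
import Combine

// Hypothetical model used only for this illustration.
final class Model: ObservableObject {
    @Published var value = 0
}

let model = Model()
var observations: [(new: Int, stored: Int)] = []

let cancellable = model.$value
    .dropFirst() // skip the current value that is delivered on subscription
    .sink { newValue in
        // Record the value passed to the sink and the value the property holds now.
        observations.append((new: newValue, stored: model.value))
    }

model.value = 1
// The sink received new == 1 while model.value still read 0.
#endif
```

Using the value passed into the sink closure is an alternative to deferring, when the receiving method can take the new value as a parameter.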

Media Type

A change to the selection of audio or video media type by the Picker is handled by an onChange modifier for the property mediaType of the FileTableObservable. The change action sets the published property mediaType of the ControlViewObservable:

.onChange(of: controlViewObservable.fileTableObservable.mediaType) { newMediaType in

controlViewObservable.stopMedia()

controlViewObservable.mediaType = newMediaType

controlViewObservable.fileTableObservable.selectIndex(3)

}

The change to the mediaType in ControlViewObservable is received by a sink that calls the PlotAudioObservable method plotAudio, which updates the plot in PlotAudioWaveformView:

$mediaType.sink { [weak self] _ in

DispatchQueue.main.async {

self?.plotAudioObservable.plotAudio()

}

}.store(in: &cancelBag)

[weak self] is a policy used to prevent retain cycles.

FileTableObservable

A manager of the file list that loads and switches between audio and video files.

Files in resource folders Audio Files or Video Files with specific extensions are loaded with loadFiles, based on the value of mediaType, selected by the Picker in PlotAudioAppView:

let kAudioFilesSubdirectory = "Audio Files"

let kVideoFilesSubdirectory = "Video Files"

let kAudioExtensions: [String] = ["aac", "m4a", "aiff", "aif", "wav", "mp3", "caf", "m4r", "flac", "mp4"]

let kVideoExtensions: [String] = ["mov"]

func loadFiles(extensions:[String] = kAudioExtensions, subdirectory:String = kAudioFilesSubdirectory) {

files = []

for ext in extensions {

if let urls = Bundle.main.urls(forResourcesWithExtension: ext, subdirectory: subdirectory) {

for url in urls {

files.append(File(url: url))

}

}

}

files.sort(by: { $0.url.lastPathComponent > $1.url.lastPathComponent })

}

func loadFiles(mediaType:MediaType) {

switch mediaType {

case .audio:

loadFiles(extensions:kAudioExtensions, subdirectory:kAudioFilesSubdirectory)

case .video:

loadFiles(extensions:kVideoExtensions, subdirectory:kVideoFilesSubdirectory)

}

}

When the change in mediaType is received by a sink in FileTableObservable, the method loadFiles is called:

$mediaType.sink { [weak self] newMediaType in

self?.loadFiles(mediaType: newMediaType)

}.store(in: &cancelBag)

[weak self] is a policy used to prevent retain cycles.

ControlViewObservable

Manages updating the plot view from changes to the controls, as well as the synchronization of the plot view with the players.

In addition to synchronizing the plot view with the players by conforming to the protocols AudioPlayerDelegate and PlotAudioDelegate, ControlViewObservable also handles interaction with audioPlayer and videoPlayer via the play, pause and stop buttons in the ControlView:

func pauseMedia() {

switch mediaType {

case .audio:

audioPlayer.pausePlayingAudio()

case .video:

videoPlayer.pause()

}

isPaused = true

}

func resumeMedia() {

switch mediaType {

case .audio:

audioPlayer.play(percent: plotAudioObservable.indicatorPercent)

case .video:

videoPlayer.play()

}

isPaused = false

}

func stopMedia() {

if isPaused { // prevents delay if audio player paused

resumeMedia()

}

isPlaying = false

isPaused = false

switch mediaType {

case .audio:

audioPlayer.stopPlayingAudio()

case .video:

videoPlayer.pause()

videoPlayer.seek(to: CMTime.zero, toleranceBefore: CMTime.zero, toleranceAfter: CMTime.zero)

}

}

A Slider changes the aspect ratio of the PlotAudioWaveformView. When the change to aspectFactor is received by a sink, the frameSize property of PlotAudioObservable is set:

$aspectFactor.sink { [weak self] _ in

DispatchQueue.main.async {

if self?.originalFrameSize == nil {

self?.originalFrameSize = self?.plotAudioObservable.frameSize

}

if let originalFrameSize = self?.originalFrameSize, let aspectFactor = self?.aspectFactor {

let newHeight = originalFrameSize.height * aspectFactor

self?.plotAudioObservable.frameSize = CGSize(width: originalFrameSize.width, height: newHeight)

}

}

}.store(in: &cancelBag)

[weak self] is a policy used to prevent retain cycles.

AudioPlayer

A wrapper for AVAudioPlayer to provide audio play progress to a delegate, as well as play audio at a specific time.

This class brings the periodic time observer and seek type functionality of the AVPlayer for video to AVAudioPlayer for audio.

Observing Time for Audio Player:

The AudioPlayerDelegate protocol enables AudioPlayer to notify its delegate of audio play progress and when play stopped:

protocol AudioPlayerDelegate: AnyObject { // AnyObject - required for AudioPlayer's weak reference for delegate (only reference types can have weak reference to prevent retain cycle)

func audioPlayProgress(_ player:AudioPlayer?, percent: CGFloat)

func audioPlayDone(_ player:AudioPlayer?)

}

AudioPlayerDelegate is constrained to AnyObject because AudioPlayer holds its delegate in the weak reference delegate. Only reference types can be referenced weakly, and the weak reference prevents a retain cycle.

AudioPlayer observes the current time of audioPlayer with a Timer whose action calls audioPlayProgress with that time.

Because AudioPlayer conforms to AVAudioPlayerDelegate it receives notifications when audioPlayer has stopped playing and calls audioPlayDone.

Seek To Time for Audio Player:

The AudioPlayer implements a method playAudioURL that can play audio at a specific time provided as a percent of total audio time.

Observing Time for Audio Player

To observe its AVAudioPlayer play time, AudioPlayer creates a Timer on the main thread with startTimer in its play method:

func startTimer() {

let schedule = {

self.timer = Timer.scheduledTimer(withTimeInterval: 0.01, repeats: true) { [weak self] _ in

if let player = self?.audioPlayer {

let percent = player.currentTime / player.duration

self?.delegate?.audioPlayProgress(self, percent: CGFloat(percent))

}

}

}

if Thread.isMainThread {

schedule()

}

else {

DispatchQueue.main.sync {

schedule()

}

}

}

The action of the Timer notifies its delegate of current audio time by calling audioPlayProgress.

Whenever the play method is called any previous timer is stopped, and a new timer created:

func play(percent:Double) {

guard let player = audioPlayer else { return }

stopTimer()

let delay:TimeInterval = 0.01

let now = player.deviceCurrentTime

let timeToPlay = now + delay

player.currentTime = percent * player.duration

startTimer()

player.play(atTime: timeToPlay)

}

Additionally, AudioPlayer is an AVAudioPlayerDelegate and conforms by implementing audioPlayerDidFinishPlaying, where it stops its Timer and notifies the delegate that audio playing stopped by calling audioPlayDone:

func audioPlayerDidFinishPlaying(_ player: AVAudioPlayer, successfully flag: Bool) {

stopTimer()

delegate?.audioPlayDone(self)

}

This way ControlViewObservable, by conforming to the protocol AudioPlayerDelegate, can update the indicator overlay of the PlotAudioWaveformView as the audio is playing and when it stops playing:

// AudioPlayerDelegate

// set delegate in init!

func audioPlayDone(_ player: AudioPlayer?) {

isPlaying = false

isPaused = false

plotAudioObservable.indicatorPercent = 0

}

// AudioPlayerDelegate

func audioPlayProgress(_ player: AudioPlayer?, percent: CGFloat) {

plotAudioObservable.indicatorPercent = percent

}

Seek To Time for Audio Player

To implement the seek analogue for AudioPlayer, note that in play the deviceCurrentTime of AVAudioPlayer is used to schedule the start of audio play, and currentTime is set to the position to play from, specified as a percent of the total audio duration:

let delay:TimeInterval = 0.01

let now = player.deviceCurrentTime

let timeToPlay = now + delay

player.currentTime = percent * player.duration

player.play(atTime: timeToPlay)

The function playAudioURL then creates an AVAudioPlayer for the given url and calls play to play the audio at a time specified as a percent of total audio time:

func playAudioURL(_ url:URL, percent:Double = 0) -> Bool {

if FileManager.default.fileExists(atPath: url.path) == false {

Swift.print("There is no audio file to play.")

return false

}

do {

stopTimer()

audioPlayer = try AVAudioPlayer(contentsOf: url)

guard let player = audioPlayer else { return false }

player.delegate = self

player.prepareToPlay()

play(percent: percent)

}

catch let error {

print(error.localizedDescription)

return false

}

return true

}

This way ControlViewObservable, by conforming to the protocol PlotAudioDelegate, can play the audio of the currently selected file when dragging has ended, at the indicatorPercent time provided:

func plotAudioDragEnded(_ value: CGFloat) {

switch mediaType {

case .audio:

isPlaying = true

if let currentFile = fileTableObservable.currentFile {

let _ = audioPlayer.playAudioURL(currentFile.url, percent: value)

}

case .video:

isPlaying = true

videoPlayer.play()

}

}

ControlViewObservable can also stop audio from playing during dragging using the AudioPlayer method stopPlayingAudio in plotAudioDragChanged:

func plotAudioDragChanged(_ value: CGFloat) {

switch mediaType {

case .audio:

if isPlaying {

audioPlayer.stopPlayingAudio()

}

isPlaying = false

isPaused = false

case .video:

videoPlayer.pause()

isPlaying = false

isPaused = false

videoPlayer.seek(to: CMTimeMakeWithSeconds(value * videoDuration, preferredTimescale: kPreferredTimeScale), toleranceBefore: CMTime.zero, toleranceAfter: CMTime.zero)

}

}